Code as a Liberal Art, Spring 2021

Unit 2, Exercise 2 lesson — Wednesday, March 3

Web Scraping, Web Crawling, Network Analysis

Last week we started talking about data structures: the various types of models and forms for organizing data in computer programs. We looked at three of the main data structures in Python: lists, tuples, and dictionaries.

Remember that we talked about how lists (Python docs) are particularly well-suited for storing sequences of data, in cases where the order is important. Lists can grow dynamically, can easily become very large, can be sorted and resorted in different orders, and can easily have elements added or removed from anywhere — beginning, middle, or end. You reference a specific item in a list by its index, and importantly, lists are always indexed numerically, using numbers in square brackets.

Here's a refresher on some common list operations:

>>> new_list = [ "a", "b", "c" ]

>>> new_list

['a', 'b', 'c']

>>> len(new_list)

3

>>> new_list.append("d")

>>> new_list

['a', 'b', 'c', 'd']

>>> new_list.remove("a")

>>> new_list

['b', 'c', 'd']

>>> new_list.insert(0,"z")

>>> new_list

['z', 'b', 'c', 'd']

>>> new_list.sort()

>>> new_list

['b', 'c', 'd', 'z']

>>> last_value = new_list.pop()

>>> last_value

'z'

>>> new_list

['b', 'c', 'd']

Note that I've shown a new list operation

here: pop(). This removes the last value from a

list and returns that value — in other

words, it allows you to set a variable to that value with

the assignment operator: =.

We also talked about tuples

(Python

docs), named in reference to the idea of a pair, triple,

quadruple, quintuple, sextuple, or etc ... From the name

"n-tuple". Like lists, tuples store sequences of values in

order. Python actually refers to both lists

and tuples as sequences

(Python

docs), which is why they can both be indexed numerically

with numbers in square brackets. But unlike lists, tuples are

not dynamic. In fact they are what's called

immutable: their values cannot change. They

cannot be sorted or resorted, you cannot add or remove values

from them. Tuples can be used for things like the x,y values of

the pixel in a digital image, or perhaps information identifying

a person or thing — the example I gave last class was the

way that a hospital records system might use tuples

like (first_name, last_name, date_of_birth) to

uniquely identify a patient.

Illustrating the limited number of operations that tuples allow:

>>> tup = ("Gritty", "Monsteur", "7-13-1901")

>>> tup

('Gritty', 'Monsteur', '7-13-1901')

>>> tup[1]

'Monsteur'

>>> tup.sort()

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

AttributeError: 'tuple' object has no attribute 'sort'

>>> tup.remove("Gritty")

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

AttributeError: 'tuple' object has no attribute 'remove'

>>> tup.append("orange")

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

AttributeError: 'tuple' object has no attribute 'append'

And lastly, we talked

about dictionaries (Python

docs): collections of key-value

pairs. Dictionaries are unsorted by default, although

you can sort them by key or value if you need to. Dictionaries

are also dynamic and can have keys and values added or removed,

and can easily grow quite large. Unlike lists, dictionaries can

have any arbitrary value as a key, and are referenced by putting

this value in square brackets like an index. Importantly, you

can check if a given key is in a

dictionary.

>>> new_dictionary = { "first_name": "Gritty", "hair": "orange", "species": "monster" }

>>> new_dictionary

{'first_name': 'Gritty', 'hair': 'orange', 'species': 'monster'}

>>> new_dictionary["last_name"] = "Monsteur"

>>> new_dictionary

{ 'first_name': 'Gritty', 'hair': 'orange',

'species': 'monster', 'last_name': 'Monsteur'}

>>> "first_name" in new_dictionary

True

>>> new_dictionary["first_name"]

'Gritty'

>>> name = new_dictionary.pop("first_name")

>>> name

'Gritty'

>>> new_dictionary

{'hair': 'orange', 'species': 'monster', 'last_name': 'Monsteur'}

>>> len(new_dictionary)

3

Information scraping on a Chicago street after the White Sox

won the world series,

2005. (From

Twitter user ryankolak)

Information scraping on a Chicago street after the White Sox

won the world series,

2005. (From

Twitter user ryankolak)

Data scraping

Now that we have this wonderful, rich toolbox of data structures to work with, let's start thinking about some new ways of getting data into them.

One way to do this is a technique known as web scraping, which essentially means using tools like computer programs and scripts to automate the tasks of gathering data from public web sites. I'm not sure the origins of this term, but it is semantically similar to web crawling, a term used to describe what search engines do when they index the web by using algorithms to automatically comb through all websites. It would be interesting to perhaps trace the differing origins of web scraping and web crawling and what types of techno-political work these various terms perform in how we understand these operations and who does them.

Interestingly, there has been recent litigation around the legality of web scraping. University of Michigan legal scholar Christian Sandvig (whose article about sorting we read during the first week of the semester) was one of the plaintiffs in these proceedings.

In my opinion, it is difficult to understand how this could be considered illegal when gathering data in this way is essentially the business model of Google and every search engine or platform that indexes web content. It is almost as if when industry does it, it's called web crawling, but when individuals do it, it's referred to more menacingly as web scraping, and becomes legally challenged.

Scraping tools

To do this work, we're going to need some new tools, in the form

of two new Python libraries: the requests library,

which facilitates the downloading of webpage content from within

Python, and Beautiful Soup, a library that offers utilities for

parsing web pages. Install them at the command line

(not in the Python shell), with the following commands

(or whatever variation of pip worked

for you when installed Pillow):

$ pip install requests $ pip install beautifulsoup4

You'll see some output about "downloading", hopefully a progress

bar, and hopefully a message that it's been "Successfully

installed". If you see a "WARNING" about

your pip version, that does not mean

anything is necessarily wrong. Test that your installation

worked by running the Python shell and typing two commands, like

this:

$ python >>> import requests >>> from bs4 import BeautifulSoup >>>If your shell looks like mine (no output) then that means everything worked.

Downloading a webpage with Python

To get started, let's download the front page of the New York Times website:

>>> import requests

>>> response = requests.get("https://nytimes.com")

>>> response

<Response [200]>

If you see something like this, it means that this worked! The

web is structured around requests

and responses. Usually, you would type a URL or

click on a link in your web browser, and your browser on your

computer would make a request to a program on

another computer called a server. Your

browser's request is specially formatted in accordance

with HTTP: the hypertext transfer

protocol. A web browser and web server are both just

computer programs that are able to encode and decode the rules

of this protocol. When the server receives

a request, it replies with

a response, which your browser receives and

uses to extract the HTML that is rendered to you in your browser

window.

What we've just done here is all of that but without the user-friendly graphical user interface (GUI) of the browser. Sometimes people call this a "headless" mode: doing the work of a GUI application but without the visual display. In effect, we are using Python code as our web browser: making requests, and receiving responses. HTTP specifies that 200 be used for a successfully fulfilled request, which is what you should see in the Python shell. Now we have to figure out what to do with this response.

The response object contains various fields that you can access to learn about the response:

-

response.status_codeis the aforementioned HTTP status code. -

response.headerscontains meta data about the HTTP request such as date, time, encoding, size, etc. -

response.cookiesincludes cookies that the server sent back to you. Normally your browser would save those, which is how websites can track you across the web, but we can ignore them. -

response.elapsedindicates the total time that the server needed to fulfill this request. -

and

response.textis the main payload: the entire content of the response from the server, containing a large amount of plain text, HTML, Javascript, and CSS code.

response.text, that is way

too big. If you want to get a teaser about what this contains,

type the following:

>>> response.text[:500] '<!DOCTYPE html>\n<html lang="en-US" xmlns:og="http://opengraphprotocol.org/schema/"> \n <head>\n <title data-rh="true">The New York Ti ...That is using a strange new Python square bracket indexing notation called a slice, and it returns the first 500 characters of the string. That will show you a somewhat readable bit of the response.

What should we do with this response? One thing we can do is simply save the response to disk, as a file. So far you have worked with files in Python as a way to read text, and as a way to write pixel data to make images. But you can also work with files as a way to write text.

We could save the entire HTML contents, like so:

>>> nytimes = open('FILENAME.html', 'wb')

>>> for chunk in response.iter_content(100000):

... nytimes.write(chunk)

Because of the way that files work, it is better to write the

file to disk in these large segments of data that we called

here chunk, each of 100000 bytes (100KB). Now,

whatever you specified as FILENAME.html will exist

as an HTML file in your current working directory. You can open

that in Finder/Explorer, and double click the file to open it in

your browser.

What else can we do with the response? Well if you wanted to

take human-readable textual content of this response and start

to work with it as you have been doing — for example, to

generate things like word frequency counts, finding largest

words, and other algorithmic processing, you can start working

with response.text. However, as you can see when

you examine a slice of that data, this contains a bunch of HTML

tags, Javascript code, and other meta data that probably

wouldn't be relevant to your textual analysis.

(Unless ... Maybe someone would want to do textual analysis on HTML code, treating it like a human language text, and analyze word lengths, frequencies, etc. Could be an interesting project.)

To start working with the content of this response in a way that focuses on the human-readable textual content and not the HTML code, we can parse the HTML using the Beautiful Soup library. Parsing is the term for dividing up any syntax into constituent elements to determine their individual meanings, and the meaning of the whole. Web browsers parse HTML, and then render that document as a GUI.

As an interesting first parsing operation, let's use Beautiful Soup simply to extract all the plain textual content, stripping out all the HTML:

>>> soup = BeautifulSoup(response.text, "html.parser") >>> plain_text = soup.get_text() >>> plain_text[:500] '\n\n\nThe New York Times - Breaking News, US News, World News and Videos\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nContinue reading the main storySectionsSEARCHSkip to contentSkip to site indexU.S.International CanadaEspañol中文Log inToday’s Paper ...On the first line, we are passing

response.text in

to the BeautifulSoup object. On the next line,

we're telling the BeautifulSoup object, which I

have called soup but you could call anything, to

give us just the text, which I'm setting into a variable

called plain_text, but again, you could call

anything. Now, when I print a slice of the

first 500 characters to the shell, you can see that it has

stripped out all the HTML tags and meta data, giving me just the

text — well, also some strangely encoded characters, and

the spacing is a little messed up, but it looks like something

you could work with. For example, if you look at another slice

of the document, it looks pretty good:

>>> plain_text[1000:1500] 'with Merck to boost production of Johnson & Johnson’s vaccine.President Biden said his administration had provided support to Johnson & Johnson that would enable the U.S. ...We could save this plain text to a file as well if you wish:

>>> nytimes_plain = open('FILENAME.txt', 'w')

>>> nytimes_plain.write(plain_text)

7407

>>> nytimes_plain.close()

Note the .txt file extension to indicate this is

plain text and not HTML. Note also that in open()

I am using 'w' this time instead

of 'wb' as above. Since I'm using a string here

instead of a response object, this other mode of

writing a file is possible and simpler.

Parsing HTML

But Beautiful Soup let's us do much more than just strip out all the HTML tags of a web page, it actually let's us use those HTML tags to select specific parts of the document. Let's use Beautiful Soup to try to pull out the links connecting pages together, and use a data structure to piece together a data representation of the structure of a web site: a data version of a sitemap.

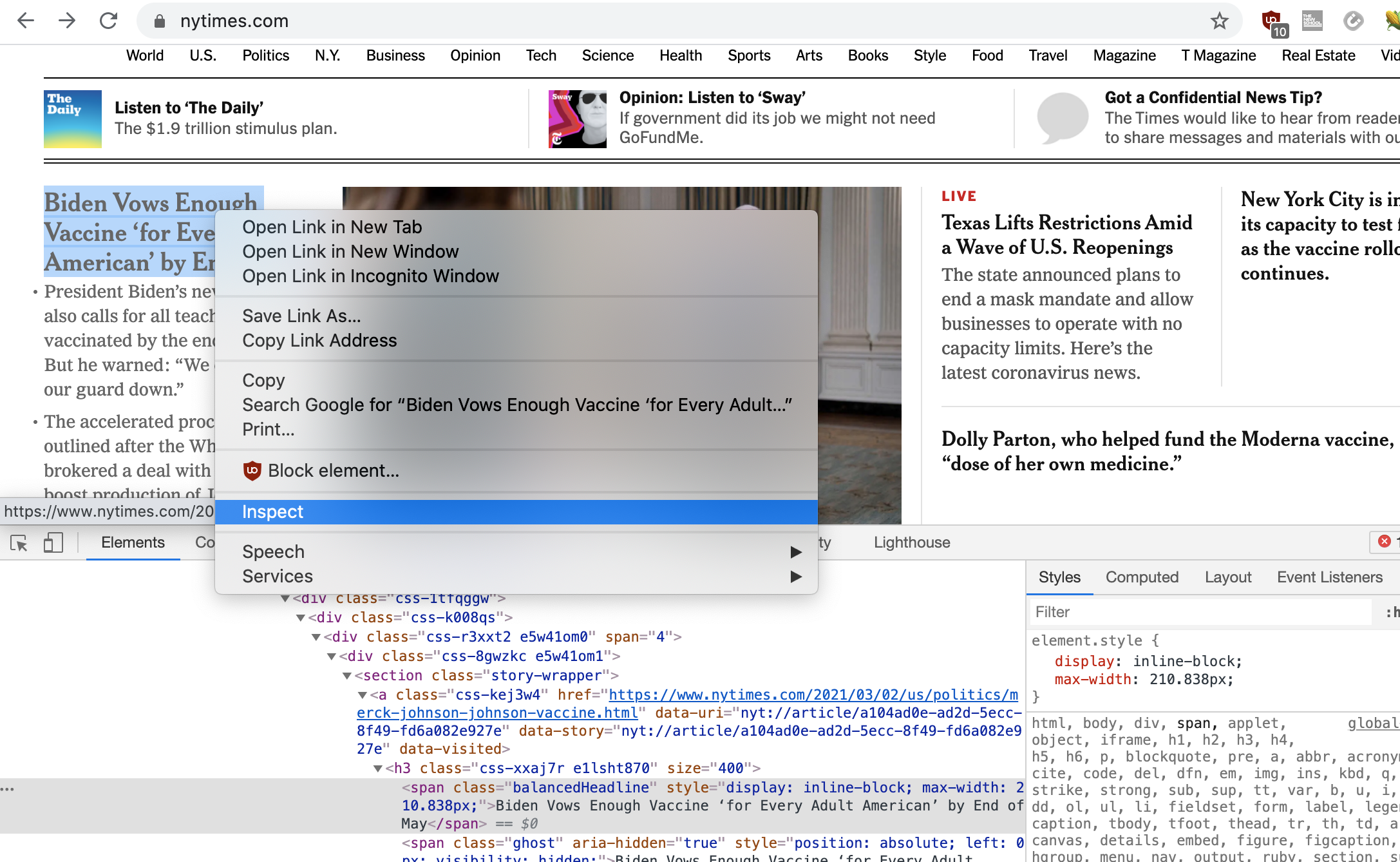

First, before we can do this, we have to poke around a bit in

the structure of the HTML that we want to parse. Open

up nytimes.com in your browser. If you are using

Chrome, you can right-click anywhere in the HTML and select

"Inspect" to view the underlying code of this webpage. (If you

don't have Chrome, you can still do this with any up-to-date

browser, but I can't offer details instructions for each

one. One Firefox for example, it is almost the same as Chrome:

right-click anywhere in the page, and select "Inspect element".)

Doing this will open up a kind of developer console, comprised

of many tools that you can use to disect a webpage, and the

various requests and responses that generated it.

Play around a bit. Right-click on several parts of the page, select "Inspect", and then see what the underlying HTML code is for this section of the document. Also, you can move the mouse pointer around in the "Elements" tab in the developer console, and as you highlight different HTML elements, you should see those highlighted in the GUI area. This can be a very effective way to learn HTML/CSS, and we'll come back to this in the final unit of the semester.

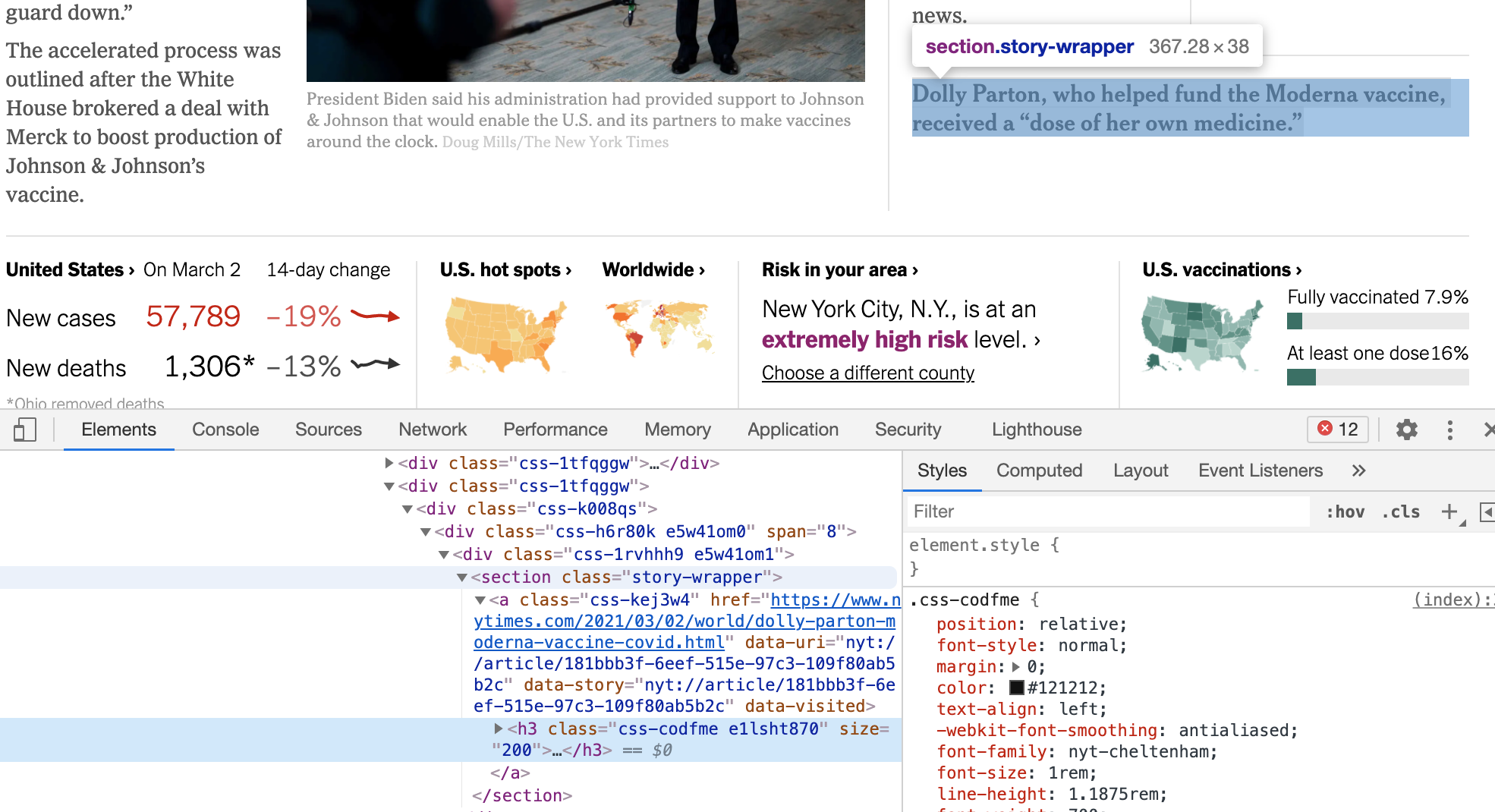

For the current task, let's right-click on some clickable headlines, and see what they look like in HTML. Here's one:

You may already know a little HTML and CSS. Again, we'll come

back to it later in the semester. For this task, suffice it to

say that links between pages are constructed through an HTML

element known as an <a> tag, whose syntax

looks like this:

<a href="http://url-of-this-link" other-properties>Visual text of the link</a>That syntax will display "Visual text of the link" to the user, and when the user clicks that link, it will take them to the URL

http://url-of-this-link.

Looking at the particular headline that I've highlighted above, I can see that it looks like there is an HTML element that looks like this:

<section class="story-wrapper">and adjacent ("inside") that

<section>

element is the <a> tag containing the URL of

this clickable headline. Clicking around, it looks like most of

the clickable headline links follow that same structure.

Beautiful Soup gives us functions in its API specifically for

parsing and retrieving all the elements of an HTML file matching

some criteria, just like what we've identified here. (See the

Beautiful Soup

documentation, "Searching

the tree" section.) Specifically, what we'll do is match all

HTML elements named section that also have

a class property set

to story-wrapper. Beautiful Soup offers

functionality for doing this with

the searching

by class functionality of the find_all()

function. You can use it in the Python shell like this:

>>> sections = soup.find_all("section", class_="story-wrapper")

The variable name sections here is arbitrary and

can be anything you'd like.

Now you could say sections[0] to see the first HTML

element that matches this criteria. The syntax for Beautiful

Soup is quite user-friendly. We know that we want to access

an <a> tag within the section tag, so we can

do that like this:

>>> sections[0].a <a class="css-kej3w4" data-story="nyt://article/a104ad0e-ad2d- ...and if we want to get teh

href property of

that <a> tag, we can do it like this:

>>> sections[0].a["href"] 'https://www.nytimes.com/2021/03/02/us/politics/merck-johnson-joh ...

So what we want to do now is iterate (loop) over all

these <section> HTML elements, contained in

the sections variable, access

the <a> tags inside, and extract the URL from

them. But this is getting complicated. Let's move into

a .py file. Open Atom, create a new file, and let's

put all the aboe together into it:

import requests

from bs4 import BeautifulSoup

response = requests.get("https://nytimes.com")

soup = BeautifulSoup(response.text, "html.parser")

sections = soup.find_all("section", class_="story-wrapper")

Now let's loop over all those sections

import requests

from bs4 import BeautifulSoup

response = requests.get("https://nytimes.com")

soup = BeautifulSoup(response.text, "html.parser")

sections = soup.find_all("section", class_="story-wrapper")

for section in sections:

url = section.a["href"]

OK, well what are we going to do with those URLs? Let's put them into a list, so we can then later go retrieve the HTML pages that they point to:

import requests from bs4 import BeautifulSoup url_queue = [] response = requests.get("https://nytimes.com") soup = BeautifulSoup(response.text, "html.parser") sections = soup.find_all("section", class_="story-wrapper") for section in sections: url = section.a["href"] url_queue.append(url)

Now that we're added all the URLs from the story links on this page into a queue, how should we go about accessing them? Let's reorganize our code a little bit. We have not use functions very much this semester. Functions are a way to take some snippet of algorithm, give it a name, and make it reusable. Sometimes functions are called subrounties, procedures or methods. (Each of these terms has a slightly different meaning, but they are broadly speaking the same idea.)

To move forward here, it will be useful to have our code in a subroutine, so let's do that, using the Python syntax for this:

import requests from bs4 import BeautifulSoup url_queue = [] # Define the function: def process_next_url(): response = requests.get("https://nytimes.com") soup = BeautifulSoup(response.text, "html.parser") sections = soup.find_all("section", class_="story-wrapper") for section in sections: url = section.a["href"] url_queue.append(url) # Call the function: process_next_url()

Here I have just moved all the code into a function

called process_next_url, defined with

the def keyword. And I am calling this function

once, a few lines later. Pay careful attention to the

indentation here. A function introduces a new

code block (as indicated by the

colon :, which means that everything must be

indented to be considered "in" that block.

However, nothing has changed in terms of behavior. Every time I

call this function it does the exact same thing. Since I am

adding new URLs to the url_queue list, what I would

like to do is be able to call this function, and each time I

call it, it processes the next URL in the list. That would look

like this:

import requests from bs4 import BeautifulSoup url_queue = [] url_queue.append("https://nytimes.com") # Define the function: def process_next_url(): next_url = url_queue.pop(0) response = requests.get(next_url) soup = BeautifulSoup(response.text, "html.parser") sections = soup.find_all("section", class_="story-wrapper") for section in sections: url = section.a["href"] url_queue.append(url) # Call the function process_next_url()Now, I start by adding

"https://nytimes.com" to my

queue, and inside process_next_url, I'm

calling pop() but passing an

argument: 0. This says to remove the first element

from the list. So my algorithm is:

- Adding my first URL to the queue

- Call my function

- My function processes that URL and adds more URLs to the queue

Example 1: Implementing a URL-processing queue with a list

import requests

from bs4 import BeautifulSoup

url_queue = []

url_queue.append("https://nytimes.com")

# Define the function:

def process_next_url():

next_url = url_queue.pop(0)

response = requests.get(next_url)

soup = BeautifulSoup(response.text, "html.parser")

sections = soup.find_all("section", class_="story-wrapper")

for section in sections:

url = section.a["href"]

url_queue.append(url)

# Call the function

while len(url_queue) > 0:

process_next_url()

What we have just implemented here is a different data structure called a queue. As you can see, queues can be implemented using a list, so it isn't entirely a different data structure, strictly speaking. But it is a kind of usage pattern, for one way you might use a list. Adding things on to the end, and removing things from the beginning. This is called a FIFO queue, or, "first in, first out".

Probably every page on the New York Times website

includes at least more than one link to other URLs. So, this

algorithm will keep adding more URls onto the queue faster than

it can process them. In other words, the queue will keep getting

longer, will never be emptied, and the while loop

will never end. Let's add some logic to end this loop. But

first, let's do something a little more interesting with these

pages.

Mapping connections

Now that we're processing all these pages, let's try to stuff them into a data structure in a way that will help us make a sitemap.

The idea here is that I want to save each web page that we process. We can save a bit of information about each one, like say, it's title. And let's also save a list of links that this page points to. What data structure(s) should we use here?

Let's use a dictionary to store our web pages, with the URLs as keys. Each key can point to another dictionary comprised of the page title, and a list of URLs that this page points to.

Putting that all together:

import requests

from bs4 import BeautifulSoup

url_queue = []

url_queue.append("https://nytimes.com")

pages = {}

# Define the function:

def process_next_url():

next_url = url_queue.pop(0)

# If we have already processed this URL don't process again,

# Call return to end this subroutine

if next_url in pages:

return

response = requests.get(next_url)

soup = BeautifulSoup(response.text, "html.parser")

sections = soup.find_all("section", class_="story-wrapper")

linked_pages = []

for section in sections:

url = section.a["href"]

url_queue.append(url)

linked_pages.append(url)

title = soup.title.string

pages[next_url] = { "title": title, "linked_pages": linked_pages }

# Call the function

while len(url_queue) > 0:

process_next_url()

Now maybe we can use pages as some criteria to stop

after a while. For now, let's say we'll stop after we've

processed 10 pages.

import requests

from bs4 import BeautifulSoup

url_queue = []

url_queue.append("https://nytimes.com")

pages = {}

# Define the function:

def process_next_url():

next_url = url_queue.pop(0)

# If we have already processed this URL don't process again,

# Call return to end this subroutine

if next_url in pages:

return

response = requests.get(next_url)

soup = BeautifulSoup(response.text, "html.parser")

sections = soup.find_all("section", class_="story-wrapper")

linked_pages = []

for section in sections:

url = section.a["href"]

url_queue.append(url)

linked_pages.append(url)

title = soup.title.string

pages[next_url] = { "title": title, "linked_pages": linked_pages }

# Call the function

while len(url_queue) > 0 and len(pages) < 10:

process_next_url()

If you'd like, at this point, you can add one last line here that

calls print(pages) to print out the data structure you

just made.

Visualizing

The data structure that we assembled here to keep track of pages and their linkages could do really well as the basis of a network diagram. In this case, that kind of diagram would serve as a kind of automatically-generated sitemap.

This type of visualization is generally called a network diagram, or a graph. There is a library called NetworkX which adds shortcuts to help you build and work with these types of network diagrams. They can be used to visualize social networks, web sites full of pages, or any other collection of associations.

We could try to visualize this using two additional libraries. Install them with the following commands:

$ pip install networkx $ pip install matplotlib

And add imports for them at the top of your file:

import networkx as nx import matplotlib.pyplot as plt

Have a look at the documentation for NetworkX library. As is explained there, building up a network diagram, or graph, is not too complicated, and this is partly because our data structure is so well-suited to the task. It's as simple as this:

G = nx.Graph()

for key in pages:

for link in pages[key]["linked_pages"]:

G.add_edge(key,link)

Loop over the URLs (i.e., the keys of the dictionary), and for

each URL (for each key), access the list of linked pages for

that URL. For each linked page, add that pair (the URL for the

key, and the URL from the list) to the graph. In the language

of graphs, a link is called an edge.

And the commands to then visualize that aren't too complicated either:

nx.draw(G, with_labels=True, font_weight='bold') plt.show()

Putting that all together, the code ends up looking like this. I had to add in some extra error checking, shown below in blue:

Example 2. Putting it all together.

import requests

from bs4 import BeautifulSoup

import networkx as nx

import matplotlib.pyplot as plt

url_queue = []

url_queue.append("https://nytimes.com")

pages = {}

# Define the function:

def process_next_url():

next_url = url_queue.pop(0)

# If we have already processed this URL, don't process again

if next_url in pages:

return

response = requests.get(next_url)

soup = BeautifulSoup(response.text, "html.parser")

sections = soup.find_all("section", class_="story-wrapper")

if len(sections) == 0:

# then we're processing a page that's not the front

sections = soup.find_all("section",attrs={"name": "articleBody"})

linked_pages = []

for section in sections:

url = section.a["href"]

url_queue.append(url)

linked_pages.append(url)

try:

title = soup.title.string

except:

title = "blank"

pages[next_url] = { "title": title, "linked_pages": linked_pages }

# Call the function

while len(url_queue) > 0 and len(pages) < 30:

process_next_url()

# Visualize

print("Visualizing now")

G = nx.Graph()

for key in pages:

for link in pages[key]["linked_pages"]:

G.add_edge(key,link)

nx.draw(G, with_labels=True, font_weight='bold')

plt.show()

I'm asking you to work with this concept

in the homework for this

week.

Have fun!