Code as a Liberal Art, Spring 2024

Unit 2, Lesson 3 — Thursday, March 28

A Markov chain algorithm

Today we're going to implement a Markov Chain algorithm to generate language designed to emulate existing text. This algorithm works by analyzing a large body of text called a corpus. It builds a data structure to represent that corpus as a collection of words, and for each, a list of words that are statistically likely to follow that word. Then we can give the algorithm a word to start with and ask it to generate a series of words likely to follow it.

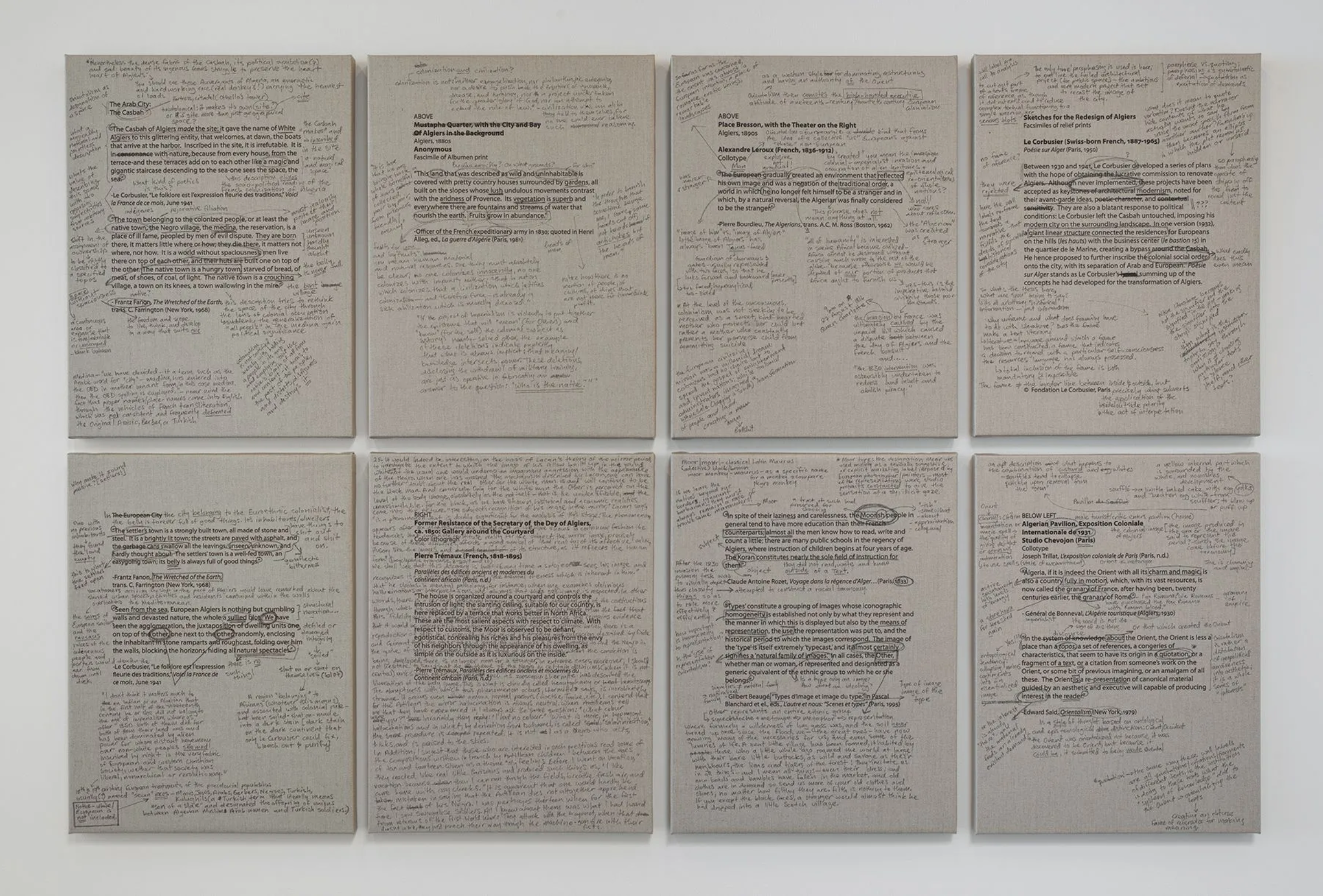

Meleko Mokgosi, "Wall of Casbah (2009 – 2014)". In this piece

the artist offers critical commentary about art and the museum

system by annotating the wall placards accompanying an

exhibition called "Walls of Algiers" at the Getty

Center. Read more

here or click to

enlarge.

Meleko Mokgosi, "Wall of Casbah (2009 – 2014)". In this piece

the artist offers critical commentary about art and the museum

system by annotating the wall placards accompanying an

exhibition called "Walls of Algiers" at the Getty

Center. Read more

here or click to

enlarge.

- Markov chain & Markov process explanation

- Markov chain data structure implementation

- An alternative data structure approach

- Wrapping up

I. Markov process & Markov chain explanation

A Markov process is a type of algorithm that starts with a given state, and determines which state to go to next, based on some probability. A state here can be many different types of things: a step of a calculation or data processing procedure, a weather pattern, a step in a biological process, a musical note, or many other things. Markov processes have a wide range of applications for modeling different kinds of phenomena. In our case, the states will be words, and we'll be using a Markov process to generate text that we will try to get to mimic natural, human language.

A Markov process depends upon a specific data structure known as a Markov chain. A Markov chain stores all the various states that a system can be in, and for each state, it stores a list of possible next states, with a probability for each that represents the likelihood of choosing that state.

In our case, since we'll be using a Markov process to generate text, and the states of our data structure will be words, for each word we will store a list of possible next words, and for each "next word" we will find some way to represent a probability for the likelihood that this next word would be chosen. We will generate all the words by reading a corpus, or a large body of text, which can be a single text file or multiple text files. For each word in our corpus, we will build up a list of all words that come after it, with the likelihood that it comes next in this body of text. Then we will implement a Markov process algorithm that uses this data structure to start with a word, makes a randomized choice to select the next word based on the probabilities that we've determined, and then repeats the process to generate sentences.

To recap, this type of project involves two main steps: (1) write the code that analyzes a body of text and generates a data structure to represent a Markov chain, and (2) implement a Markov process algorithm that uses this data structure to generate text.

The goal is to generate new text that reads like it could plausibly be from the source text that we are starting with.

(jump back up to table of contents)II. Markov chain data structure implementation

Let's start by processing a text and generating a Markov chain data structure.

For the below work, I will be using this very simple test case:

I like apples. I like oranges. Apples are red or green. Oranges are orange. Red, green, and orange are colors.You can download it here: unit2lesson3_simple.txt

This data structure should look something like this:

{ 'I': ['like', 'like'],

'like': ['apples.', 'oranges.'],

'apples.': ['I'],

'oranges.': ['Apples'],

...

}

Here we have a dictionary for which the keys are each word in

the text, and for each key, the associated value will be a list

of "next words", by which we mean every word that comes after

this word in the text.

With this data structure, we could use the Python

random.choice() function to choose an item from the

list of "next words", and statistically the choice would yield a

random word with the same probability that it appears in the

text.

To implement this let's start by opening the text file and

making a new empty dictionary called markov.

file = open("unit2lesson3_simple.txt","r")

markov = {}

import pprint

file = open("unit2lesson3_simple.txt","r")

markov = {}

words = file.read().replace("\n"," ").split()

for (i,word) in enumerate(words):

if i == len(words) - 1:

break

next_word = words[i+1]

if word in markov:

markov[word].append(next_word)

else:

markov[word] = [next_word]

# print(markov)

pprint.pp(markov)

(jump back up to table of contents)

III. An alternative data structure approach

A slightly different way that we might implement a Markov chain data structure slightly to look like this:

{ 'I': { 'like': 2} ,

'like': { 'apples.': 1, 'oranges.': 1 },

'apples.': { 'I': 1 },

'oranges.': { 'Apples': 1 },

...

}

A dictionary for which the keys are words in the text, and the values are a dictionary of "next words", by which we mean every word that comes after this word in the text. We represent each "next word" in our list as a key/value pair in which the key is the "next word", and the value is the number of times that it appears after this word in the text.

The example output here would be the data structure for the same example as my pseudocode above.

In the first version above, we're storing each "next word" in a list, with repetitions. While in the second version above, we're storing each "next word" as a dictionary of the word and its word count.

There are definitely some advantages and disadvantages to each approach! For one it helps illustrate the concept of time/space tradeoff, a common topic within computer science.

If you'd like to work with a longer body of text, you might try something like Charles Dickens' David Copperfield, which I downloaded from archive.org. You can download the David Copperfield text here.

For your homework this week, I'd like you to get the above code running for a sample corpus and see what the results look like. Use the same sample corpus and see if you can get results that look right.