Code Toolkit: Python, Fall 2020

Week 14 — Wednesday, December 1 — Class notes

Table of contents

(The following links jump down to the various topics in these notes.)

- Network protocol

- Understanding the HTTP protocol by invoking it manually

- More experimentation with HTTP

- Network connections in Python

- Completing the networked example from last week

- Your own web server

- Running Python code in a webserver

I. Network protocol

Networks are made up of protocols. In class, we discussed the text on protocol by Alex Galloway and Eugene Thacker.

Requests for Comments (RFCs) specify the rules of the various protocols and open standards that make up the internet.

Some examples:

HTTP: RFC 2068

HTTP (later revision): RFC 7230, especially section 2.1

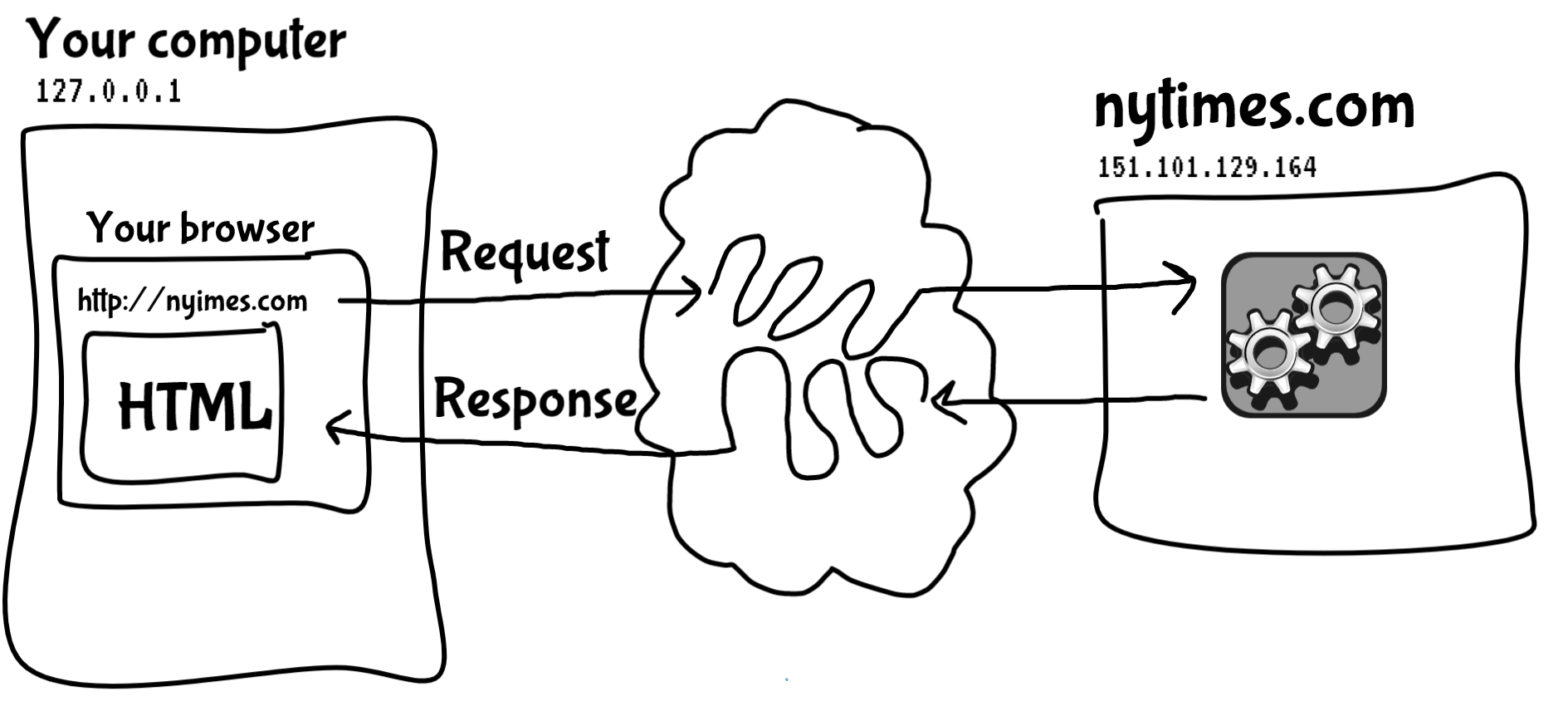

The most basic aspect of the HTTP protocol is that the client opens a connection to a server and sends a request in the form of some text, then the server replies with a response which contains the data content that the client requested.
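Because the request really is just text, it can be written out as a Python string. A minimal sketch (Python 3; build_request is a hypothetical helper of our own, not part of any library) that assembles the request line, a Host header, and the blank line that ends a minimal HTTP/1.1 GET request:

```python
def build_request(host, path="/"):
    """Return the raw bytes of a minimal HTTP/1.1 GET request."""
    lines = [
        "GET " + path + " HTTP/1.1",  # request line: method, path, protocol version
        "Host: " + host,              # HTTP/1.1 requires a Host header
        "",                           # these two empty entries produce the blank
        "",                           # line that marks the end of the request
    ]
    # HTTP lines end with a carriage return plus a line feed (\r\n)
    return "\r\n".join(lines).encode("ascii")

print(build_request("google.com"))
# b'GET / HTTP/1.1\r\nHost: google.com\r\n\r\n'
```

Nothing is sent over the network here; the function only produces the text a client would send.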

II. Understanding the HTTP protocol by invoking it manually

Normally, when you type an address into your web browser, the browser software does all of these steps automatically: connecting to a server, issuing a request for a webpage, receiving the server's response, and displaying that to you.

But we can experiment with this in a very low-level way on the command line, using a command called nc, which stands for "net cat".

Sidenote. The name "net cat" comes from the fact that nc was designed as a networked version of an older Unix command called cat. The cat command gets its name because its purpose is to concatenate files together. You can use it like this:

$ cat file1.txt file2.txt > new-file.txt

which will take the contents of file1.txt and file2.txt, smash them together, and put all of that into new-file.txt. Try it with some short plain text files to see what you get. You can always read more about any Unix command by typing man and the command name ("man" is short for "manual page"). For example:

$ man cat

will give you the full documentation for the cat command.
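Since reading and writing files with open() is familiar from previous weeks, here is a rough Python equivalent of that cat command, as a sketch. The concatenate function and the demo files are our own illustration; the example creates its own throwaway files in a temporary folder rather than touching real ones.

```python
import os
import tempfile

def concatenate(input_paths, output_path):
    """Mimic `cat file1 file2 > output`: copy each input, in order, into output."""
    with open(output_path, "w") as out:
        for path in input_paths:
            with open(path) as f:
                out.write(f.read())

# Demo in a throwaway folder so no real files are touched
folder = tempfile.mkdtemp()
file1 = os.path.join(folder, "file1.txt")
file2 = os.path.join(folder, "file2.txt")
with open(file1, "w") as f:
    f.write("Hello, ")
with open(file2, "w") as f:
    f.write("world!\n")

combined = os.path.join(folder, "new-file.txt")
concatenate([file1, file2], combined)
with open(combined) as f:
    print(f.read(), end="")  # Hello, world!
```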

The nc command takes two arguments: a server name and a port number. Any given server listens for requests on multiple ports, kind of like how a train station might have multiple tracks coming in, or an address may have multiple mailboxes. Every protocol has a standard port that it is associated with. By convention, HTTP is usually on port 80. HTTP can operate on a different port, but this is non-standard, so any client would need to know to try HTTP on that other port number.

$ nc google.com 80
GET / HTTP/1.1
Host: google.com

After running the nc command, type the GET and Host lines shown, pressing ENTER after each one. Then conclude the request by pressing ENTER again to send an empty line. For me, this request gives the following response:

HTTP/1.1 200 OK
Date: Wed, 01 Dec 2021 08:36:29 GMT
Expires: -1
Cache-Control: private, max-age=0
Content-Type: text/html; charset=ISO-8859-1

The first line includes 200 OK, which is what HTTP calls a status code. You can read the list of status codes defined for HTTP/1.0 in RFC 1945, section 6.1. Other common status codes include 3xx (redirection) and 4xx (client error).

After that we see several lines like Expires: -1. These are called HTTP headers. They are like metadata about the response: not the content that you requested (the webpage), but rather data about that page or about the server's response.

For instance, if I use nc to connect to newschool.edu, I get a response that includes this:

Date: Wed, 01 Dec 2021 15:00:00 GMT
Content-Type: text/html; charset=UTF-8
Content-Length: 10500
Connection: keep-alive
Set-Cookie: AWSALB=Jrf9u1aK2gBjFMHl8Te6ZmTVmqzO4UlMgsqSWmlJwdw8bS4wua/QkXLmsdIiQ+3Lm4VVaD+by18vKVXayHk03Z1CIPf5Deu6LCPZECLp4WoBm8rx44d/ltzkx4dC; Expires=Wed, 08 Dec 2021 08:37:18 GMT; Path=/
Set-Cookie: AWSALBCORS=Jrf9u1aK2gBjFMHl8Te6ZmTVmqzO4UlMgsqSWmlJwdw8bS4wua/QkXLmsdIiQ+3Lm4VVaD+by18vKVXayHk03Z1CIPf5Deu6LCPZECLp4WoBm8rx44d/ltzkx4dC; Expires=Wed, 08 Dec 2021 08:37:18 GMT; Path=/; SameSite=None
Location: https://www.newschool.edu/
Server: Microsoft-IIS/8.5
X-Powered-By: ASP.NET

By looking through these headers you can find out a lot of information about the server you are connecting to and how it is operating. In this case you can see: the size of the data that the server is returning (10500 bytes, which is 10500 characters if the text is plain ASCII, at one byte per character), the web server software (a Microsoft webserver called IIS), that the server is trying to set some cookies in my browser, and some other things.
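The difference between bytes and characters matters once text contains anything beyond plain ASCII. A quick Python 3 check (the sample strings are arbitrary) shows that an accented letter takes two bytes when encoded as UTF-8:

```python
# Content-Length counts bytes; that equals the number of characters
# only when every character fits in one byte (e.g. plain ASCII text)
ascii_text = "Hello"
accented_text = "caf\u00e9"  # "café", written with an escape to avoid editor ambiguity

print(len(ascii_text), len(ascii_text.encode("utf-8")))        # 5 5
print(len(accented_text), len(accented_text.encode("utf-8")))  # 4 5
```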

After the header section, you should see a large chunk of text that might look familiar to you if you've ever done any HTML coding.
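Because a blank line separates the headers from the content, a raw response can be split apart with a few string operations. A minimal sketch (Python 3; parse_response is a hypothetical helper, and the sample is a shortened version of the Google 301 reply that appears in the socket example in Section IV):

```python
def parse_response(raw):
    """Split a raw HTTP response (bytes) into status line, headers, and body."""
    # ISO-8859-1 maps every byte to a character, so decoding never fails
    # (a simplification: real clients decode the body using its declared charset)
    text = raw.decode("iso-8859-1")
    # A blank line (\r\n\r\n) separates the headers from the content
    head, _, body = text.partition("\r\n\r\n")
    status_line, *header_lines = head.split("\r\n")
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")  # split at the first colon only
        headers[name.strip()] = value.strip()
    return status_line, headers, body

raw = (b"HTTP/1.1 301 Moved Permanently\r\n"
       b"Location: http://www.google.com/\r\n"
       b"Content-Type: text/html; charset=UTF-8\r\n"
       b"Server: gws\r\n"
       b"\r\n"
       b"<H1>301 Moved</H1>")
status, headers, body = parse_response(raw)
print(status)               # HTTP/1.1 301 Moved Permanently
print(headers["Location"])  # http://www.google.com/
print(body)                 # <H1>301 Moved</H1>
```

A plain dictionary keeps only the last of any repeated header, such as the two Set-Cookie lines in the newschool.edu response, so real HTTP libraries store headers more carefully.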

Going back to google.com, the response I received included this:

<!doctype html><html itemscope="" itemtype="http://schema.org/WebPage" lang="en"><head> <meta content="Search the world's information, including webpages, images, videos and more. Google has many special features to help you find exactly what you're looking for." name="description"><meta content="noodp" name="robots"><meta content="text/html; charset=UTF-8" http-equiv="Content-Type"> <meta content="/logos/doodles/2021/seasonal-holidays-2021-6753651837109324-6752733080595605-cst.gif" itemprop="image"><meta content="Seasonal Holidays 2021" property="twitter:title"><meta content="Seasonal Holidays 2021 #GoogleDoodle" property="twitter:description"><meta content="Seasonal Holidays 2021 #GoogleDoodle" property="og:description"><meta content="summary_large_image" property="twitter:card"><meta content="@GoogleDoodles" property="twitter:site"><meta content="https://www.google.com/logos/doodles/2021/seasonal-holidays-2021-6753651837109324-2xa.gif" property="twitter:image"><meta content="https://www.google.com/logos/doodles/2021/seasonal-holidays-2021-6753651837109324-2xa.gif" property="og:image">

Again, if you're familiar with HTML you might be able to see some HTML tags in there, such as <!doctype>, <html>, <head>, and <meta>. You might also notice that Google is specifying its current "doodle" logo image as a holiday image:

https://www.google.com/logos/doodles/2021/seasonal-holidays-2021-6753651837109324-2xa.gif

(You can visit that URL to see what it looks like.)

III. More experiments with HTTP

Try this for different websites. What status codes do you get in response?
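Python 3 ships a table of the standard status codes in its http module, which can help decode what you get back. A small sketch: HTTPStatus is a real standard-library enum, while describe and its category labels are our own illustration based on the first digit of the code:

```python
from http import HTTPStatus

def describe(code):
    """Return the standard reason phrase and the broad category of a status code."""
    categories = {
        1: "informational",
        2: "success",
        3: "redirection",
        4: "client error",
        5: "server error",
    }
    # The first digit (code // 100) determines the category
    return HTTPStatus(code).phrase, categories[code // 100]

for code in (200, 301, 404):
    print(code, describe(code))
# 200 ('OK', 'success')
# 301 ('Moved Permanently', 'redirection')
# 404 ('Not Found', 'client error')
```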

If you get a response with status code 301 Moved Permanently and a Location header, that means that you have requested a page which now resides at a new location. You might try requesting that new location.

For example, in the google.com example above, I get a 301 and Location: http://www.google.com/. This is because Google is trying to redirect me to the full URL www.google.com instead of google.com. If I try the request again with the full URL (connecting to www.google.com and sending Host: www.google.com), I get a 200 OK with the content I showed above.

However, if you get a 301 and the Location header includes https, this means that the site you're trying to access only accepts encrypted requests. Sending and receiving encrypted requests and responses is too difficult (in my opinion) to do manually via the command line in this way.
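Those two cases can be told apart mechanically. A minimal sketch (Python 3; redirect_target is a hypothetical helper that takes the status code and a dictionary of headers) that returns the new location and whether it uses https:

```python
from urllib.parse import urlsplit

def redirect_target(status_code, headers):
    """For a 3xx response, return (new_url, needs_encryption); otherwise None."""
    if not 300 <= status_code < 400 or "Location" not in headers:
        return None
    new_url = headers["Location"]
    # An https:// location means the site only accepts encrypted requests,
    # which plain nc cannot produce
    needs_encryption = urlsplit(new_url).scheme == "https"
    return new_url, needs_encryption

print(redirect_target(301, {"Location": "http://www.google.com/"}))
# ('http://www.google.com/', False)
print(redirect_target(301, {"Location": "https://www.newschool.edu/"}))
# ('https://www.newschool.edu/', True)
```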

If you immediately get a 400 Bad Request, it is likely because of some command line incompatibility: HTTP expects each line to end with a carriage return followed by a line feed (\r\n), but pressing ENTER in the terminal normally sends only a line feed (\n). Instead of pressing ENTER at the end of each line, you can try typing CONTROL-v, then ENTER, then ENTER again. I encountered this issue, for example, trying to access classes.codeatlang.com. (More info & explanation here.)
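When a program writes the request instead of a person typing it, the program controls the line endings directly. A minimal sketch (Python 3; to_http_lines is a hypothetical helper) that converts plain newline endings into the carriage-return-plus-newline endings HTTP uses:

```python
def to_http_lines(typed_text):
    """Convert LF line endings to the CRLF line endings HTTP expects."""
    # Normalize first so lines that already end in CRLF don't get a doubled \r
    return typed_text.replace("\r\n", "\n").replace("\n", "\r\n")

typed = "GET / HTTP/1.1\nHost: classes.codeatlang.com\n\n"
print(repr(to_http_lines(typed)))
# 'GET / HTTP/1.1\r\nHost: classes.codeatlang.com\r\n\r\n'
```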

IV. Network connections in Python

This is tedious! Fortunately, there are many other tools we can use to work with network connections. As I mentioned, your web browser handles all of this for you (we say that the browser implements the HTTP protocol).

If you want to write a computer program that handles network connections, Python has libraries that will handle much of this for you.

Network connections in Python (as in most programming languages) work in a very similar way to the input() command, or to the way that you can open() a file and write text to it. In this case, though, the "file" that you are opening and writing to is not an actual file saved on your computer, but rather a link that sends the text you write to a different computer, which is reading whatever you write at the same time on the other end.
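Python's socket objects can make the file analogy literal: their makefile() method returns a file-like object with the same write(), readline(), and read() methods as a file from open(). A sketch in Python 3 that uses socket.socketpair(), which creates two sockets already connected to each other on the same machine, so no server or internet connection is involved:

```python
import socket

# socketpair() returns two sockets already connected to each other,
# so the "file-like" idea can be tried without any server
left, right = socket.socketpair()

# makefile() wraps each socket in a file-like object, like the ones open() returns
writer = left.makefile("w")
reader = right.makefile("r")

writer.write("Hello from the other end\n")
writer.flush()                 # push the buffered text out through the connection
received = reader.readline()   # the other end reads it like a line from a file
print(received, end="")        # Hello from the other end

for f in (writer, reader):
    f.close()
left.close()
right.close()
```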

In most programming languages (Python included) these basic network connections are called sockets. Here is a Python interactive shell example that uses a socket connection to replicate the behavior of nc:

>>> import socket
>>> s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
>>> s.connect(("google.com", 80))
>>> s.sendall(b'GET / HTTP/1.1\r\nHost: google.com\r\n\r\n')
>>> data = s.recv(1024)
>>> data
b'HTTP/1.1 301 Moved Permanently\r\nLocation: http://www.google.com/\r\nContent-Type: text/html; charset=UTF-8\r\nDate: Wed, 01 Dec 2021 11:21:38 GMT\r\nExpires: Fri, 31 Dec 2021 11:21:38 GMT\r\nCache-Control: public, max-age=2592000\r\nServer: gws\r\nContent-Length: 219\r\nX-XSS-Protection: 0\r\nX-Frame-Options: SAMEORIGIN\r\n\r\n<HTML><HEAD><meta http-equiv="content-type" content="text/html;charset=utf-8">\n<TITLE>301 Moved</TITLE></HEAD><BODY>\n<H1>301 Moved</H1>\nThe document has moved\n<A HREF="http://www.google.com/">here</A>.\r\n</BODY></HTML>\r\n'
>>> s.close()
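One limitation of the shell session above is that a single recv(1024) call returns at most 1024 bytes, so a longer page would be cut off. A fuller sketch (Python 3) loops until the server closes the connection, and adds a Connection: close header asking the server to do so once it has answered. So that it runs without internet access, it talks to a tiny stand-in server started on 127.0.0.1 inside the same script; HelloHandler and fetch are our own names, not library API.

```python
import socket
import threading
from http.server import HTTPServer, BaseHTTPRequestHandler

class HelloHandler(BaseHTTPRequestHandler):
    """A tiny stand-in server so the example runs without internet access."""
    def do_GET(self):
        body = b"<h1>Hello!</h1>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet

def fetch(host, port, path="/"):
    """Send a raw HTTP GET over a socket and read until the server closes."""
    request = ("GET " + path + " HTTP/1.1\r\n"
               "Host: " + host + "\r\n"
               "Connection: close\r\n"   # ask the server to hang up when done
               "\r\n").encode("ascii")
    with socket.create_connection((host, port)) as s:
        s.sendall(request)
        chunks = []
        while True:
            chunk = s.recv(1024)   # at most 1024 bytes per call
            if not chunk:          # b"" means the server closed the connection
                break
            chunks.append(chunk)
    return b"".join(chunks)

server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0: any free port
threading.Thread(target=server.serve_forever, daemon=True).start()

response = fetch("127.0.0.1", server.server_address[1])
print(response.decode("ascii"))

server.shutdown()
server.server_close()
```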

You might be thinking that using a socket like this is almost as tedious as using nc on the command line! That is true. Using a socket connection in Python in this way could at least be automated, but it is still difficult to use because you as the programmer have to think about so many details. Fortunately there are easier ways.

The library that is probably the best and easiest to use for making HTTP requests is called, appropriately, requests. The project homepage and documentation are available at docs.python-requests.org. Sadly, it is not readily available in Processing's Python mode, which currently only supports Python 2.

Since we'll be working with Processing code as our network client, and Processing only supports Python version 2, we'll have to use a different library called urllib. The official documentation is available here. As that explains, "the urlopen() function is similar to the built-in function open()" (which we've used the last two weeks), "but accepts Universal Resource Locators (URLs) instead of filenames," though "it can only open URLs for reading."
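For comparison, in Python 3 the same function lives in urllib.request. The sketch below is Python 3 only, so it won't run as-is in Processing's Python 2 mode, where you would call urllib.urlopen(url) instead. To keep it self-contained, it fetches from a small stand-in server on 127.0.0.1 (JSONHandler is our own) rather than a real website:

```python
import threading
import urllib.request
from http.server import HTTPServer, BaseHTTPRequestHandler

class JSONHandler(BaseHTTPRequestHandler):
    """Stand-in server that always answers with a small JSON document."""
    def do_GET(self):
        body = b'{"message": "hello"}'
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet

server = HTTPServer(("127.0.0.1", 0), JSONHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = "http://127.0.0.1:%d/" % server.server_address[1]

# urlopen() returns a file-like object that you read() from, much like open()
with urllib.request.urlopen(url) as response:
    status = response.status
    text = response.read().decode("utf-8")
print(status)  # 200
print(text)    # {"message": "hello"}

server.shutdown()
server.server_close()
```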

V. Networked example from last week

Let's continue with the example from last week.

Create a new Processing sketch. Download or copy this code and paste it into the main tab:

week13_networked_example_part5.pyde

Modify myCreature["name"] so it is a unique string: your name or whatever you'd like. Modify myCreature["color"] to the color values you'd like to use.

Next, create a new tab called week13_networking and copy/paste this code into it:

Modify ip_address and port to be the values of the server that we're using today, which are "174.138.45.118" and "5000". (Note the quotes even though these are numbers.)

As last week's class notes explain, the code in this second tab defines two functions: getData(cList) and sendData(c).

- getData() retrieves a JSON file from a webserver with the given IP address, populates a list of dictionaries, and returns that list. You would use it like this: creatureList = getData()

- sendData(c) sends the data about one single "creature" to this webserver. This webserver has its own Python code, which saves this data into a list and distributes it to anyone who calls getData(cList).
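Turning the server's JSON into a list of dictionaries, as getData() is described as doing, is the job of Python's json module. A minimal sketch: the sample JSON below is hypothetical (its keys are a guess based on the creature's name, color, size, and position), not the actual format the class server sends.

```python
import json

# HYPOTHETICAL sample of a server reply; the real keys and values may differ
raw = ('[{"name": "Ada", "color": [255, 0, 0], "size": 40, "x": 120, "y": 80},'
       ' {"name": "Grace", "color": [0, 0, 255], "size": 25, "x": 300, "y": 210}]')

creatureList = json.loads(raw)  # JSON array of objects -> list of dictionaries
for creature in creatureList:
    print(creature["name"], creature["x"], creature["y"])
# Ada 120 80
# Grace 300 210
```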

Run your Processing sketch and see what you get. If my server is up and running, you should see your own "creature" moving around at your command, as well as creatures for everyone else connecting to the server at this moment.

If you would like to see what the webserver code looks like, you can have a look here: week13-web-server.py. This is a relatively short amount of code! This looks simple, but it is using a lot of things that we have not talked about yet. Mainly, this is using a Python web server library called Flask, which you can read about if you are curious.

How does this work? Well, it uses query parameters to send JSON data about the color, size, and position of your creature to a server. The server then receives this data from everyone who is running this client, compiles it together, and sends that list of all creature data to all users. Notice lines 35-40. They are compiling a query string of variable names and values and attaching it to the URL. This might look familiar to you if you've ever scrutinized a URL before. Query parameters are attached onto the end of a URL with a ?, each parameter has the form name=value, and multiple parameters are separated by &. Whenever you see this punctuation pattern in a URL, you can see what data is being sent to the server. Have a look at this YouTube URL for example: https://www.youtube.com/watch?v=T2kwz_8e0os&t=9s. Here the v is the video ID, and the t indicates the time in seconds at which to start the video.
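Python's urllib.parse module can both take a query string apart and build one. A sketch in Python 3 (in Python 2, urlencode lives in urllib, while parse_qs and urlsplit live in urlparse); the params dictionary and the example.com address are hypothetical:

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Taking a URL apart: everything after "?" is the query string
url = "https://www.youtube.com/watch?v=T2kwz_8e0os&t=9s"
query = urlsplit(url).query
print(query)            # v=T2kwz_8e0os&t=9s
print(parse_qs(query))  # {'v': ['T2kwz_8e0os'], 't': ['9s']}

# Building one: urlencode joins name=value pairs with "&" and escapes
# characters that aren't allowed in URLs (here, the space becomes "+")
params = {"name": "Ada Lovelace", "size": 40}  # hypothetical parameters
print("http://example.com/?" + urlencode(params))
# http://example.com/?name=Ada+Lovelace&size=40
```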

You can set up your own webserver to receive requests with parameters, but first we have to see how to run a basic webserver, and how to create a Python program that will listen for requests and respond to them.

VI. Your own web server

How can you set up your own Python web server to listen for requests and offer responses in return?

Python actually comes bundled with a very simple webserver library that implements HTTP, called http.server. (Documentation here.) Using this, we can experiment with running our own very simple webserver locally on our computers.

First, make a new directory (folder) in Finder (or Explorer), create a new file in Atom, add some simple HTML code, and save it into this new folder as hello.html. You can use this basic HTML:

<html>
  <body>
    <h1>Hello!</h1>
    <p>This is some basic HTML</p>
  </body>
</html>

Now, cd into this new directory, and type the following command:

$ python -m http.server 8000

(If your python command runs Python 2, where this module does not exist, use python3 instead.) What this does is run a simple webserver, locally on your machine, using port 8000. In the terminal, you are now viewing a log of all the requests that the server receives, and messages about how it is handling responses to them. Open up a browser and try visiting this local webserver. Your computer can always refer to itself with the IP address 127.0.0.1, and remember that we are using port 8000, which you specify with a colon, so type this into your browser: 127.0.0.1:8000. You should see something happening in the log, and your browser should show you a list of the contents of this directory. If you click on hello.html, you should now see your HTML file displayed.
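The rule for which port a browser connects to can be sketched with urllib.parse: an explicit port after the colon wins; otherwise the protocol's conventional port applies. The DEFAULT_PORTS table and port_for function are our own illustration (Python 3):

```python
from urllib.parse import urlsplit

# Conventional ports for the two web protocols in these notes
DEFAULT_PORTS = {"http": 80, "https": 443}

def port_for(url):
    """Return the port a client would connect to for this URL."""
    parts = urlsplit(url)
    # An explicit ":port" in the URL wins; otherwise fall back to the convention
    if parts.port is not None:
        return parts.port
    return DEFAULT_PORTS[parts.scheme]

print(port_for("http://google.com/"))                  # 80
print(port_for("http://127.0.0.1:8000/hello.html"))    # 8000
print(port_for("https://www.newschool.edu/"))          # 443
```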

When you are ready to stop, type CONTROL-C to shut down the webserver and get back to the command line. Try adding additional files and subfolders to the folder of this simple webserver and re-running the server. As you click around to HTML files in your browser, note how the URLs of these pages correspond to the folder and subfolder structure that you create. This is the basic principle behind how websites and URLs are traditionally structured: the IP address and port refer to a computer program (a server) running on a computer somewhere, and the URL with all of its slashes (/) refers to folders and subfolders which that server program can see.
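The same kind of server can also be started from inside a Python 3 program, which makes the URL-to-folder mapping easy to test. A rough sketch, not exactly what the command line version does: it builds a throwaway folder with a subfolder, serves it on a free port chosen by the operating system instead of 8000, and fetches one page from each level. QuietHandler is our own subclass that only silences the log; the directory argument requires Python 3.7 or later.

```python
import os
import tempfile
import threading
import urllib.request
from functools import partial
from http.server import HTTPServer, SimpleHTTPRequestHandler

# Build a throwaway site: one page at the top level, one in a subfolder
site = tempfile.mkdtemp()
os.makedirs(os.path.join(site, "projects"))
with open(os.path.join(site, "hello.html"), "w") as f:
    f.write("<h1>Hello!</h1>")
with open(os.path.join(site, "projects", "page.html"), "w") as f:
    f.write("<p>A page in a subfolder</p>")

class QuietHandler(SimpleHTTPRequestHandler):
    def log_message(self, *args):
        pass  # skip the per-request log lines the command line version prints

# Serve files from the `site` folder; port 0 asks the OS for any free port
handler = partial(QuietHandler, directory=site)
server = HTTPServer(("127.0.0.1", 0), handler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base = "http://127.0.0.1:%d" % server.server_address[1]

# The URL path mirrors the folder path: /projects/page.html -> projects/page.html
pages = {}
for path in ("/hello.html", "/projects/page.html"):
    with urllib.request.urlopen(base + path) as response:
        pages[path] = response.read().decode("utf-8")
    print(path, "->", pages[path])

server.shutdown()
server.server_close()
```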

If you're comfortable with an oversharing experiment, try to find your computer's IP address:

$ ifconfig
... lots of output! ...
en0: flags=8863<UP,BROADCAST,SMART,RUNNING,SIMPLEX,MULTICAST> mtu 1500
	options=400<CHANNEL_IO>
	ether 3c:22:fb:d4:dc:62
	inet6 fe80::101d:663d:5483:8667%en0 prefixlen 64 secured scopeid 0x6
	inet 192.168.0.190 netmask 0xffffff00 broadcast 192.168.0.255
	nd6 options=201<PERFORMNUD,DAD>
	media: autoselect
	status: active
... more output ...

Look for en0 and the field that says inet. That is your computer's IP address. Write it down or copy/paste it somewhere.

Now cd into your home directory (cd ~) or your downloads directory, and type the command above to run a Python webserver on your local machine. Now share your IP addresses with each other and let's browse the files in these directories on each other's computers! If you're not comfortable sharing all this, you can cd into your folder for this class, or make a folder with nothing in it for this experiment.

VII. Running Python code in a webserver

OK, well that is nice and fun, but what if you want a webserver that does more than just return static HTML pages to a user? What if you want a webserver that dynamically generates content using all that you have learned about coding, and returns the output of a program to the web user?

For this, we can use a technique called CGI, which stands for Common Gateway Interface. This is a technique where a server receives an HTTP request, but instead of simply returning an HTML file, the server executes a computer program, and the output of that program is returned as the HTTP response. It is similar to running a Python program on the command line, but instead of typing a command to execute, a user on the web requests a URL with their browser, which then invokes the program, and the browser then displays the response. In a way, it is also somewhat similar to all the work that we have been doing in Processing, except that instead of interactive keyboard and mouse input, the only inputs come from the request, and instead of displaying visual graphic results, you return textual content (such as HTML) in the web response.
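To see what the server's side of this arrangement involves, here is a simplified simulation in Python 3, not how http.server actually implements CGI: it saves a tiny program to a temporary file, runs it as a separate process with sys.executable, passes request details through environment variables (QUERY_STRING and REQUEST_METHOD are standard CGI variable names), and captures what the program prints. run_like_cgi is a hypothetical helper.

```python
import os
import subprocess
import sys
import tempfile

# A tiny CGI-style program: it prints a header, a blank line, then content
# built from the query string it was given
program = '''
import html
import os
print("Content-Type: text/html")
print("")
print("<p>You asked for: " + html.escape(os.environ.get("QUERY_STRING", "")) + "</p>")
'''
script = os.path.join(tempfile.mkdtemp(), "program.py")
with open(script, "w") as f:
    f.write(program)

def run_like_cgi(script_path, query_string):
    """Simulate the server side of CGI: run the program and capture its output."""
    # CGI hands request details to the program through environment variables
    env = dict(os.environ, QUERY_STRING=query_string, REQUEST_METHOD="GET")
    result = subprocess.run([sys.executable, script_path], env=env,
                            capture_output=True, text=True, check=True)
    # Everything before the first blank line is headers; the rest is the body
    headers, _, body = result.stdout.partition("\n\n")
    return headers, body

headers, body = run_like_cgi(script, "name=Ada")
print(headers)      # Content-Type: text/html
print(body, end="") # <p>You asked for: name=Ada</p>
```

A real server would then wrap this output in an HTTP response, adding a status line such as 200 OK before the headers the program printed.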

Let's experiment with this ...

Create a subfolder called cgi-bin. Within that subfolder, create a new file called server.py, and open that in Atom.

cd to the folder that contains cgi-bin, and make sure that your server.py file is executable. You can check this by typing the following:

$ ls -l cgi-bin

Executable files show an x in the permissions column at the left (for example, -rwxr-xr-x). It probably is not executable by default, so to make sure that it is, type the following command:

$ chmod a+x cgi-bin/server.py

(This command is short for "change mode", and it allows you to change read, write, and execute permissions on files.)

The first line of this file should be the executable path to Python on your system. Type:

$ which python
/usr/local/bin/python

For me, that outputs /usr/local/bin/python, but for you it may be different. Whatever it says, copy that output, and paste it in as the first line of server.py, preceded by a pound sign and an exclamation point (#!), like this:

#!/usr/local/bin/python

Now you can add any arbitrary Python code into this program. To follow the protocol, the first thing your program must output is a header line saying what kind of content it is returning, followed by an empty line:

server.py:

print("Content-Type: text/html")
print("")

After that, try adding some other Python commands. For example, for now you could simply add:

print("Hello, world!")

Now try running an HTTP server in the folder that contains cgi-bin. This time we have to add a command line option (--cgi) to indicate that we are also trying to run CGI scripts, but otherwise the command is the same.

$ python -m http.server --cgi 8000

And now in your browser try visiting: 127.0.0.1:8000/cgi-bin/server.py
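Once "Hello, world!" shows up, a natural next step is to make the output depend on the request, using the query parameters from Section V. A sketch of a fuller server.py, assuming Python 3; the name parameter and the make_page function are our own choices. The server passes the part of the URL after ? to the script in the QUERY_STRING environment variable.

```python
#!/usr/bin/env python3
# (Or use the path that `which python` printed for you as the line above.)
import html
import os
from urllib.parse import parse_qs

def make_page(query_string):
    """Build the HTML body from the URL's query string, e.g. "name=Ada"."""
    params = parse_qs(query_string)
    name = params.get("name", ["world"])[0]  # fall back to "world" if no name given
    # html.escape stops visitors from injecting their own HTML tags
    return "<h1>Hello, " + html.escape(name) + "!</h1>"

# Header, blank line, then the page, as the CGI protocol requires
print("Content-Type: text/html")
print("")
print(make_page(os.environ.get("QUERY_STRING", "")))
```

With the server running as described above, visiting 127.0.0.1:8000/cgi-bin/server.py?name=Ada should display "Hello, Ada!", and leaving off the query string should fall back to "Hello, world!".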