Coding Natural Language, Fall 2021, Week 8

Week 8 — Wednesday, October 20 — Class notes

Working with the Natural Language Toolkit (NLTK)

Let's get started working with some more interesting Natural Language Processing (NLP) techniques using NLTK.

Plan for the day

- The NLTK Book

- Working with texts, from NLTK or elsewhere

- Workspace setup

- Python in a file versus the interactive shell

- Some introductory NLTK techniques

- Homework

I. The NLTK Book

The functionality that I'll be showing you below is largely drawn from The NLTK Book (available online here), and in particular the first chapter. I'll be sharing some lessons from that chapter, and if you are curious, I encourage you to explore the book and find other lessons that interest you.

II. Working with texts, from NLTK or elsewhere

NLTK is really two things put together: a collection of NLP functionality, and a collection of texts (a.k.a. corpora, the plural of corpus). In other words, like most Python libraries, NLTK comes with a bunch of programming commands that you can use after you import the library into a program, but unlike most other Python libraries, NLTK also comes with data for you to experiment with. This data consists mainly of freely available texts from sources such as Project Gutenberg (a "volunteer effort to digitize and archive cultural works", Wikipedia).

Today and for your homework this week, I'd like you to experiment with your own text, and I'll show you how to bring that in to Python to work with in NLTK.

If you are looking for freely available textual material online, I can recommend archive.org. Archive.org is probably most famous for The Wayback Machine, which lets you view archived snapshots of web pages, even ones that are no longer available online. But in addition to this, Archive.org is, well, an archive: host to a vast trove of multimedia material. If you visit the site, click the book icon, and click All texts, you will be brought to a page listing all of their text collections. Some of these (like commercially available books) are often only available temporarily, on a "borrow" model, as if you were getting the book from a library. Other freely available texts are open for you to download.

I clicked around here, looking for something interesting, and found the National Security Internet Archive, and within that a collection called the FBI Files, and within that, a bundle of documents all about the Watergate scandal involving Richard Nixon in the early 1970s.

I've provided one document from that bundle for us to work with today, but I encourage you to look around and find something interesting to you. When you find something, make sure that under "Download Options", you click on "Full text" and download a .txt file. The other formats (such as Kindle or PDF) are going to be much harder to access from Python and NLTK.

The file I'll be working with is available here: Watergate Summary_djvu.txt

III. Workspace setup

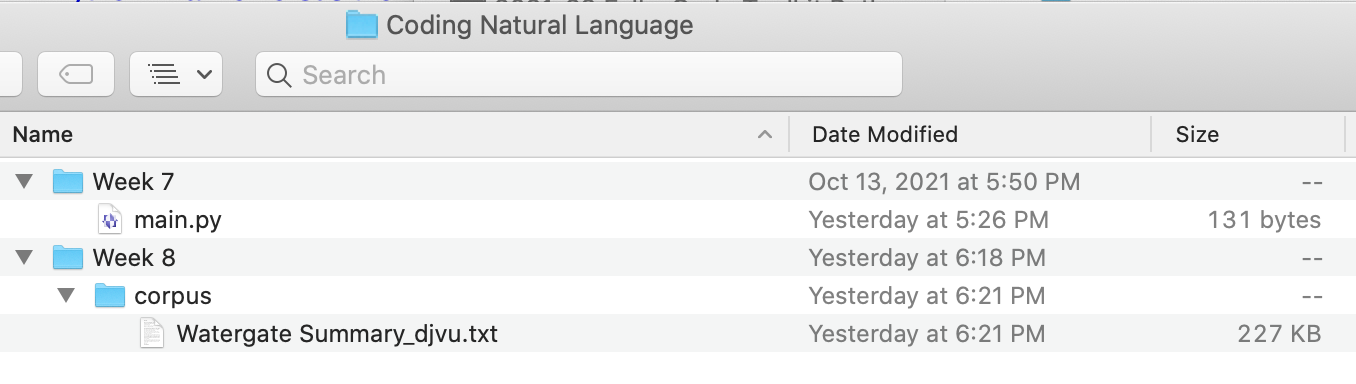

Make a folder called Week 8 and, in it, create a subfolder called corpus. Save a text file into the corpus folder (whether it is the above text file or one of your own). Your folder for our class should now look like this:

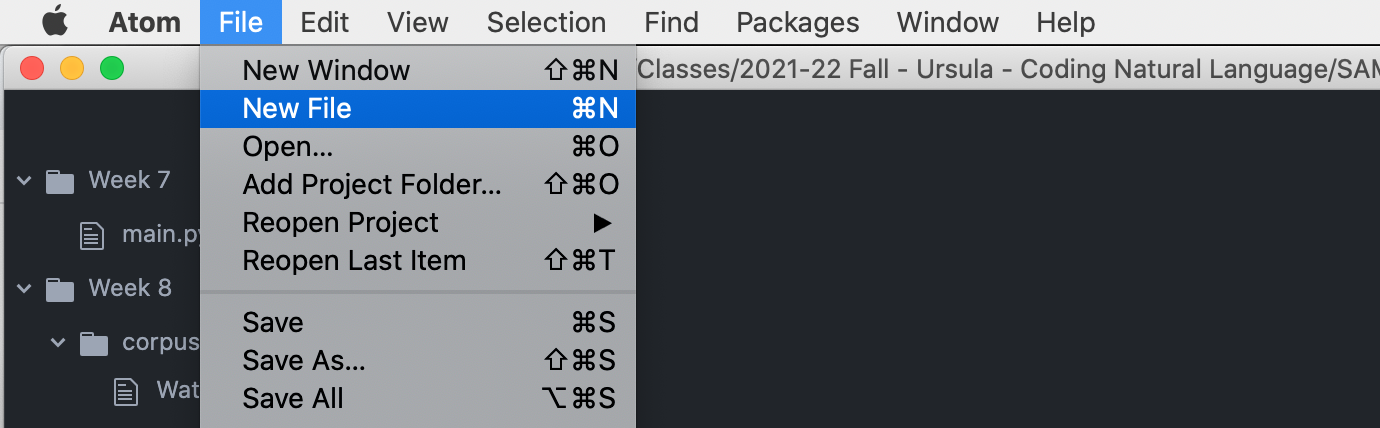

Open Atom, from the menu click File > New File

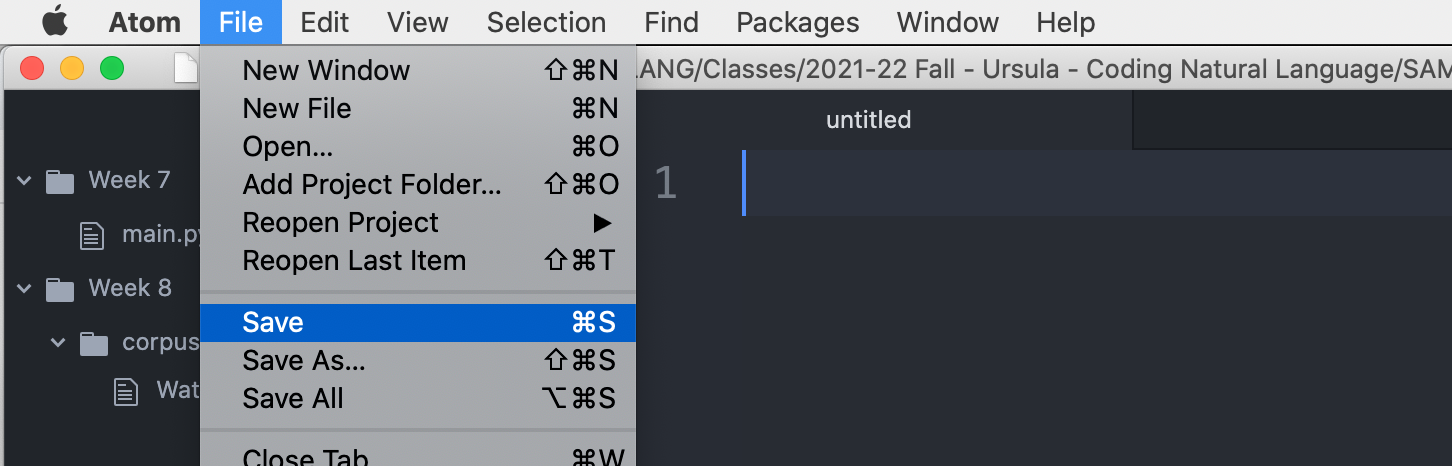

Then, from the menu click File > Save

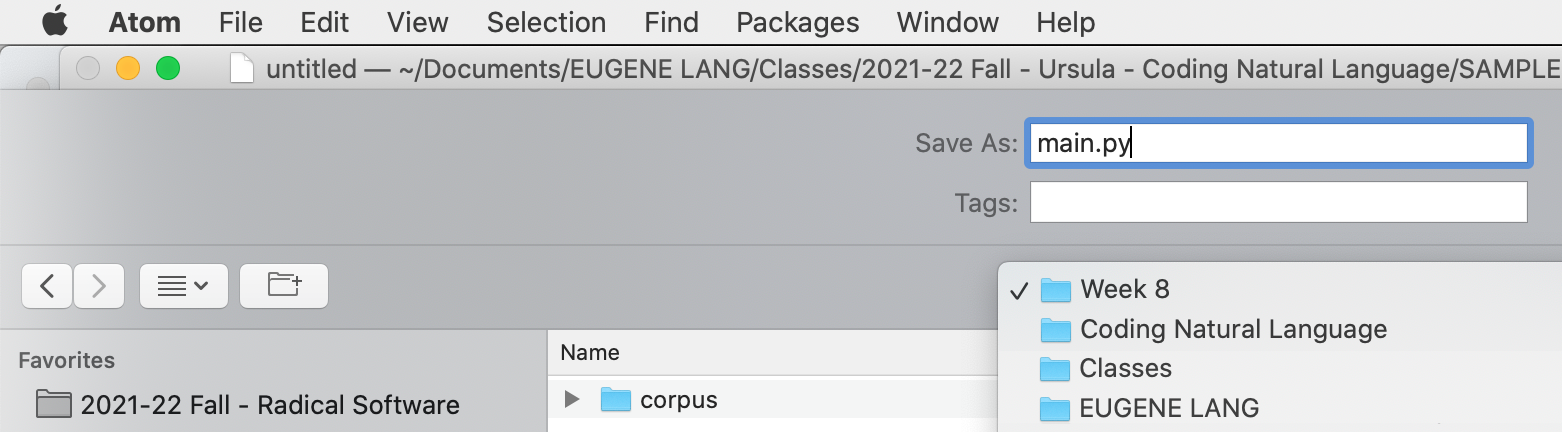

Then navigate to your Week 8 folder, name your file main.py, and click "Save".

Now add the following lines to main.py and save the file:

import nltk

print("hello")

IV. Python in a file versus the interactive shell

There are two main ways of working with Python and running Python code: in a file, and in the interactive shell.

(a) Python in a file

The more common method is to put a bunch of Python commands into a file that ends with the .py extension, save that file, and run it from the command line. You can edit any .py file in Atom. Once you save it, you can flip over to the command line and run the file with the python3 command (or python if you are using Python v2, but I don't think anyone in class is doing that).

$ cd Week8

$ pwd

/Users/rory/Documents/2021-22-Fall-Coding-Natural-Language/Week8

$ ls

corpus main.py

$ python3 main.py

hello

A note on style: When you see commands that are formatted in this style (black background, gray line on top, rounded corners), I am signifying to you that these are valid commands to enter into the command line. The dollar sign ($) is meant to signify the command prompt; you should not type it. Any text that I include here that does not begin with the $ command prompt is meant to signify sample output that you might see in response. Your output may be slightly different based on your system, where you have your folders located, and so on.

You do not need to type cd, pwd, or ls every time you want to run a Python program. I include those above for demonstration. But you do need to make sure that your command line is in the same folder (a.k.a. directory) that contains your .py Python code file. You can use the ls command (which means "list") to verify that the .py file is in your current directory. If not, you need to use cd to change into the directory that contains your code file. See last week's notes for some explanation on how to do that (i.e., the drag-and-drop trick).

(b) The interactive Python shell

The second way to run Python commands is in interactive mode, in which case you type Python commands one-by-one, pressing enter each time, and Python will evaluate the command and give you a result. This technique is useful for testing or debugging small bits of code.

To do this, type python3 at the command line:

$ python3

Python 3.8.3 (v3.8.3:6f8c8320e9, May 13 2020, 16:29:34)

[Clang 6.0 (clang-600.0.57)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>>

This is called the Python shell. It is similar to the normal command line in that there is a prompt and you type commands, but the commands available to you here are completely different: they are Python commands. Note the different command prompt (>>>), which signifies that you are in the Python shell.

To exit out of the Python shell, type exit() or press Control-D. That will bring you back to the regular command line shell.

V. Some introductory NLTK techniques

Now, let's experiment with some introductory NLTK techniques from chapter 1 of The NLTK Book.

(a) In the interactive shell

First let's do this in an interactive Python shell. Later we'll put it all in a file. To start, make sure you are in the Python shell (look for the >>> prompt, and if you don't have that, type python3 and press enter). Now type the following:

>>> import nltk

>>> f = open("corpus/Watergate Summary_djvu.txt")

You shouldn't see any output yet. The open() command opens a file so that you can read from it or write to it from within your Python code.

If you get a "FileNotFoundError", that means that you are not in the directory that contains your corpus folder. See above for comments about what your folder structure should look like and how to cd into the right place.
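If that error is hard to track down, it can help to ask Python where it is looking. Here is a minimal sketch of one way to do that, using only Python's standard library. The helper name read_text_file is made up for these notes (it is not part of NLTK), and the encoding settings are an assumption that suits messy scanned text. It also uses a "with" block, which closes the file automatically once reading is done.

```python
import os
from pathlib import Path


def read_text_file(path):
    """Return the contents of a text file, or None if the file is missing.

    read_text_file is a hypothetical helper for these notes, not an NLTK function.
    """
    file_path = Path(path)
    if not file_path.is_file():
        # Show where Python is looking, which is usually the cause of the error.
        print("Could not find:", file_path)
        print("Current directory:", os.getcwd())
        return None
    # The "with" block closes the file automatically when it finishes.
    # errors="replace" is an assumption: it swaps unreadable bytes for a
    # placeholder character instead of stopping with an error.
    with open(file_path, encoding="utf-8", errors="replace") as f:
        return f.read()


file_contents = read_text_file("corpus/Watergate Summary_djvu.txt")
if file_contents is not None:
    print(len(file_contents), "characters read")
```

If the "Current directory" that gets printed is not your Week 8 folder, exit the Python shell, cd into the right place, and try again.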

Next:

>>> file_contents = f.read()

The read() command reads the contents of a file as a string (programming jargon for a chunk of text) and saves that text into a variable. Here, that variable is called file_contents, but this is an arbitrary variable name. You can use whatever you'd like. Next:

>>> tokens = nltk.word_tokenize(file_contents)

>>> tokens[0:10]

['-', '¥', '\\', ')', 'FEDERAL', 'BUREAU', 'OF', 'INVESTIGATION', 'WATERGATE', '(']

The word_tokenize() command takes a string of text (in this case, the contents of the file_contents variable) and parses that into smaller atomic units, called tokens. NLTK has many different types of tokenizers. In this case, we are using the default tokenizer for dividing up a chunk of text into words, which roughly means splitting the text wherever there are spaces or punctuation marks.
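To get a feel for what "dividing text into tokens" means, here is a deliberately simplified sketch that needs nothing but Python's built-in re module. The function name simple_tokenize is made up for illustration, and this is not how nltk.word_tokenize() works internally; it only shows the basic idea of separating words from punctuation.

```python
import re


def simple_tokenize(text):
    """Split text into word tokens and single punctuation tokens.

    A deliberately simplified stand-in, NOT how nltk.word_tokenize works.
    """
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


print(simple_tokenize("The FBI investigated Watergate, didn't it?"))
```

Notice that this rough version chops "didn't" into three pieces. NLTK's tokenizer treats contractions more carefully, which is one reason to use it instead of writing your own. Also, if word_tokenize() stops with a LookupError, read the message: it names a data package that NLTK needs to download first. In my experience, running nltk.download("punkt") in the Python shell usually resolves it (newer NLTK versions may ask for "punkt_tab" instead).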

In the Python shell, you can display the value of a variable simply by typing its name. Above, the second line shows you the value of the tokens variable. However, since this is a large text file, the number of tokens would be very large! So the bracket notation here ([0:10]) displays only the first 10 tokens in the file. If you see some junk like I did, it is probably just artifacts from an imperfect OCR (optical character recognition) scan.
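The bracket notation is called slicing, and it works on any Python list. Here is a small sketch you can paste into the shell to experiment with it. The short token list is made up to stand in for the real one.

```python
# A short made-up token list standing in for the real one.
tokens = ["FEDERAL", "BUREAU", "OF", "INVESTIGATION", "WATERGATE", "SUMMARY"]

print(len(tokens))    # how many tokens there are in total
print(tokens[0:3])    # the first three tokens (positions 0, 1, and 2)
print(tokens[:3])     # same thing: a missing start means "from the beginning"
print(tokens[-2:])    # the last two tokens
print(tokens[3])      # one single token, at position 3
```

Python starts counting at 0, and the number after the colon is where the slice stops, not the last item included. That is why [0:10] gives you exactly 10 tokens.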

Next, let's do something with the tokens:

>>> text = nltk.Text(tokens)

>>> text.concordance("criminal")

The first line creates a Text object, which is an object that NLTK uses to process texts. The second command does a concordance analysis on one particular word, in this case "criminal". It shows us every instance of the word in the file and gives some surrounding context. Try some different words and see what you find.
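If you are curious what a concordance is doing under the hood, here is a rough sketch of the idea in plain Python. The function simple_concordance and its window parameter are my own invention for illustration; NLTK's concordance() is more polished and formats its output differently.

```python
def simple_concordance(tokens, word, window=3):
    """Return one context string for each place `word` appears in `tokens`.

    A simplified illustration; NLTK's concordance() formats its output differently.
    """
    lines = []
    for i, token in enumerate(tokens):
        # Compare in lowercase so "Criminal" and "criminal" both match.
        if token.lower() == word.lower():
            left = tokens[max(0, i - window):i]        # up to `window` tokens before
            right = tokens[i + 1:i + 1 + window]       # up to `window` tokens after
            lines.append(" ".join(left + [token] + right))
    return lines


sample = "the criminal case was closed but the Criminal division reopened it".split()
for line in simple_concordance(sample, "criminal", window=2):
    print(line)
```

The sketch walks through the tokens one at a time and, whenever it finds the word, grabs a few neighbors on each side.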

Next:

>>> text.similar("criminal")

The similar() command tries to find similar words, i.e., words that appear in similar contexts.

And lastly:

>>> text.common_contexts(["criminal","exact"])

This command tries to find common contexts between these two words, that is, pairs of surrounding words (one before, one after) that both of the above words appear between. Experiment to look for some others.
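The notion of a "context" can be sketched in a few lines of plain Python. Here I treat a word's context as the pair made of the word just before it and the word just after it, then look for pairs that two words share. The function contexts_of is a made-up name for these notes, and this is a simplified picture of the idea rather than NLTK's actual implementation.

```python
def contexts_of(tokens, word):
    """Return the set of (word before, word after) pairs surrounding `word`."""
    found = set()
    # Start at 1 and stop one short of the end so every match has two neighbors.
    for i in range(1, len(tokens) - 1):
        if tokens[i].lower() == word.lower():
            found.add((tokens[i - 1].lower(), tokens[i + 1].lower()))
    return found


sample = "the criminal case and the exact case were a criminal matter".split()
shared = contexts_of(sample, "criminal") & contexts_of(sample, "exact")
print(shared)
```

In this tiny sample, both words show up between "the" and "case", so that pair is the one context they have in common. Two words that share many contexts are, loosely speaking, being used in similar ways, which is the intuition behind similar() as well.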

You can find thorough explanations of all of these commands and their parameters on the NLTK documentation page for the Text module.

(b) In a Python file

Now that we have experimented with some NLTK techniques in the shell, let's move all this code into a Python file, so that you can easily run it later, without typing each command one-by-one.

Back in Atom, make sure you are editing the main.py file in your Week 8 folder.

You can copy/paste the above commands one-by-one into your file. Or for your convenience I have put them all here:

import nltk

f = open("corpus/Watergate Summary_djvu.txt")

file_contents = f.read()

tokens = nltk.word_tokenize(file_contents)

print(tokens[0:10])

text = nltk.Text(tokens)

text.concordance("criminal")

text.similar("criminal")

text.common_contexts(["criminal","exact"])

Note that I wrapped tokens[0:10] in a print() command to display the first 10 tokens. In the interactive shell, you can simply type a variable name to display its value, but when running a Python file, you have to explicitly call print() to display it.

Let's add some additional print() messages to make the output a little friendlier:

import nltk

f = open("corpus/Watergate Summary_djvu.txt")

file_contents = f.read()

print("\nThe first 10 tokens:")

tokens = nltk.word_tokenize(file_contents)

print(tokens[0:10])

text = nltk.Text(tokens)

print("\nConcordance analysis for 'criminal':")

text.concordance("criminal")

print("\nWords similar to 'criminal':")

text.similar("criminal")

print("\nCommon contexts for 'criminal' and 'exact':")

text.common_contexts(["criminal","exact"])

Another note on style: In my class notes, whenever I format code in blue with a dotted underline, it is to signify that this code has been added since the previous snippet. I hope this will make it easier for you to follow along with my examples and see precisely what I have added from one example to the next.

The special character \n here designates a newline, i.e., a line break, and it is how you can display a line break from within a computer program. This special designation of \n for newline is common across many programming languages.
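Here is a tiny sketch you can try in the shell to see how \n behaves. The example strings are made up.

```python
message = "first line\nsecond line"

print(message)              # displays on two separate lines
print(len("a\nb"))          # \n counts as a single character
print(message.split("\n"))  # break the string apart at each newline
```

Even though you type two characters (a backslash and an n), Python stores them as one newline character.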

If you'd like to make things a little easier to work with, add a variable to act as a placeholder for the word you are interested in, then use that variable throughout your program:

import nltk

f = open("corpus/Watergate Summary_djvu.txt")

file_contents = f.read()

print("\nThe first 10 tokens:")

tokens = nltk.word_tokenize(file_contents)

print(tokens[0:10])

text = nltk.Text(tokens)

word_of_interest = "criminal"

print("\nConcordance analysis for '" + word_of_interest + "':")

text.concordance(word_of_interest)

print("\nWords similar to '" + word_of_interest + "':")

text.similar(word_of_interest)

print("\nCommon contexts for '" + word_of_interest + "' and 'exact':")

text.common_contexts([word_of_interest,"exact"])

One more note on style: When I format code text in orange with a dashed underline, it signifies code that I have changed from one snippet to the next.

Here I am using the + character to concatenate multiple strings, i.e., to stitch them together.

This technique allows you to change only the value of the word_of_interest variable, save, and re-run your code to perform all of this analysis on a different word. Try changing word_of_interest to a different word, re-running, and seeing what you get.
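As an aside, Python (version 3.6 and later) offers another way to drop a variable into a string, called an f-string. This is optional and not something the code above requires; I include a small sketch only so that you recognize it if you see it elsewhere. The variable names heading_plus and heading_f are just for this comparison.

```python
word_of_interest = "criminal"

# Building the heading with +, as in the notes above.
heading_plus = "\nConcordance analysis for '" + word_of_interest + "':"

# The same heading as an f-string: the variable goes inside curly braces.
heading_f = f"\nConcordance analysis for '{word_of_interest}':"

print(heading_plus == heading_f)
print(heading_f)
```

Both approaches produce the same text, so use whichever one you find easier to read.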

VI. Homework

- Read this article: Hannah Zeavin, "Auto-Intimacy", from The Distance Cure. Post a reading response into this document.

- Visit archive.org and search for a large text file. (Or you can look somewhere else if you'd like.)

Take the main.py file that you have from above and, using the techniques that we discussed in class, modify it to work on the text file that you have found. Experiment with these techniques, have a look at chapter 1 from The NLTK Book, and see if you can do anything different or in addition to the above.

Can you find out "something interesting" about your text with this analysis? What types of questions do these analysis techniques allow you to ask? What types of questions do they not allow you to ask? What are some situations or research questions in which these techniques might prove useful? Write up 100-200 words of reflection in response to these questions. Put these comments in a file called "Week 8 reflections" (Google Doc or Microsoft Word) and put them in your Week 8 folder.

Upload your Week 8 folder to your Google Drive work folder by Tuesday at 8pm.

Please note: Just to be clear, both parts of this assignment are due for everyone in class to complete by Tues, 8pm. I'll be reviewing your work and we'll discuss your reading responses in class.