Code as a Liberal Art, Spring 2025

Unit 2, Lesson 2 — Thursday, March 20

Data structures and data scraping

Introduction, background, context

Digital stuff is often thought of as divided into algorithms and data. Sometimes these categories are described as, on the one hand, computer programs of executable instructions or actions, and on the other hand, the digital objects that those instructions act on. We might even go as far as calling this a kind of "digital ontology", in the sense that all digital stuff can plausibly be divided into one of these two categories.

In Unit 1 we looked at some algorithms, made some patterns, and generated some digital files, but we didn't really think about those files as data, per se. If you generated graphical patterns using loops and the modulo operator, they likely looked somewhat like data visualizations, but there wasn't actually any data there that we were visualizing. It's almost like they were visualizations of pure process, visualizing the patterns of algorithm. Similarly for your algorithmic collages: for the most part these used randomization as the primary composition technique, although if you wrote code to process and filter any of your images, you were already beginning to think of them as data to be analyzed in some sense.

For Unit 2, we are turning our attention more toward data. Where Unit 1 focused on formalist digital explorations and using algorithms for creative, generative production, Unit 2 will shift to how data is structured, how it is gathered, and how we can use data and algorithms as the basis for new output.

Data scraping? Cleaning up after a February 5, 2008 ticker

tape parade celebrating the New York Giants winning the Super

Bowl. (More

info available

here from Flickr

user wireful)

Data scraping? Cleaning up after a February 5, 2008 ticker

tape parade celebrating the New York Giants winning the Super

Bowl. (More

info available

here from Flickr

user wireful)

Table of contents for the topics today

(Click each to jump down to the corresponding section.)

- Data structure: lists, tuples, and dictionaries

- Data scraping

- Scraping tools

- Downloading a webpage with Python

- Parsing HTML

- A web crawling algorithm with a queue

- A data structure for data scraping

- Wrapping up & homework

I. Data structure: lists, tuples, and dictionaries

The topic of data structures is an enormous one in computer science. Many undergraduate computer science curricula will dedicate an entire semetser just to learning various data structures, when they should be used, and how they operate. Finding and optimizing data structures is an ongoing area of computer science research today. We will be thinking about data structures throughout the semester (indeed, we already have) but we will only spend a few weeks with them as the center of our attention, so the scope of this focus will be necessarily brief. We will focus on a few of the most commonly used data structures, how they are used in Python, how they can be combined, and when you might want to use one over another.

We'll start our work today by working interactively (entering commands into the Python shell and immediately seeing their output results) and then build to craft Python code files.

Make a new folder to work with this week. I'll call

mine Unit 2, Lesson 2 and recommend

that you do the same. Open a new window in VS Code and drag that

folder in.

I.a. Lists

We have already been working with one common Python data structure: lists. A list is an ordered sequence of values. In Python, those values can be heterogenous. In other words, they can all be different types. Other programming languages are not so forgiving and require all items in a list to be the same kind of thing - i.e., all numbers, or all text. But in Python we can mix it up.

Let's look at some of the operations of lists by working interactively in the Python shell. Many of these commands we have already seen and been using, but I'm putting them here as a more formal introduction and as a lead in to other data structures we'll be talking about today.

-

assign a list to any variable using the assignment

operator (i.e. the equal sign

=) - create a new list using square brackets

- new lists can be created empty or with items in them

-

use

print()to print a list with all its contents -

use

append()to add new items to the end of a list -

use

pop()to remove an item from a list, specified by its index number — e.g.pop(0)to remove the first item (remember, always starting with0) - use square bracket notation with a number index a list, which means to access a specific value in a list based on it's position

-

get the size of a list with the

len()command -

check if a value is in a list with the

incommand

And here is how each of those commands would look in the Python

shell. (Remember: pay careful attention to the command

prompts in my notes here. $ indicates

the default command prompt that you get in Terminal,

while >>> is the Python shell,

which you can run by typing python3

or python at the command prompt.)

$ python3

>>> my_list = []

>>> my_list = [101, 13, 42, "a", "N", "xyz"]

>>> print(my_list)

[101, 13, 42, 'a', 'N', 'xyz']

>>> my_list.append("spaghetti")

>>> print(my_list)

[101, 13, 42, 'a', 'N', 'xyz', 'spaghetti']

>>> my_list[0]

101

>>> len(my_list)

7

>>> 101 in my_list

True

>>> my_list.pop(0)

101

>>> len(my_list)

6

>>> 101 in my_list

False

Again, this process of using square bracket notation to access a

specific item in a list by number is

called indexing the list. The number in

brackets refers to the position in the list of the value that

you want, and is called the index. Remember

that list positions always start at 0. You can also

use variables as the index:

>>> i = 3 >>> my_list[i] 'a'Using variables as the list index like this is useful because then you can reference the items from a list within a loop for example. A process I usually describe as "looping over a list". Here I'm looping over just a part of this list, just to illustrate that one needn't loop over the whole thing:

>>> i = 3 >>> while i < 6: ... print(my_list[n]) ... i = i + 1 ... a N xyz

We have already been using lists for several things. We saw how

lists can be used to represent the data of a digital image. In

this case, each value of the list represented a pixel color,

usually specified as (red, green, blue). So if an

image was 10 pixels wide and 10 pixels tall, this list of pixel

color data would be 100 in total (10 x 10).

We have seen two ways of accessing images as data:

-

by looping over image data as one continuous list of pixel

values using the PIL command

getdata(), and -

by looping over the image with a nested

loop iterating over both

xandyvalues using the Python Image Library commandgetpixel().

In the first method above, as we have

discussed, getdata() returns a single list. To

review, we can see this in action by examining this very small

image

(u2-1-small-image.png)

which is only 4 pixels tall and 4 pixels wide.

Right-click on the image file link above and save it into your folder for this week. Then open a terminal in VS Code and type the following commands:

$ python3

>>> from PIL import Image

>>> im = Image.open("u2-1-small-image.png")

>>> im.size

(4, 4)

>>> pixels = list(im.getdata())

>>> pixels

[(0, 0, 2), (118, 119, 121), (112, 113, 115), (0, 0, 0),

(76, 77, 79), (159, 160, 162), (150, 151, 153), (255, 255, 255),

(62, 63, 65), (95, 96, 98), (104, 105, 107), (50, 50, 52),

(48, 49, 51), (76, 77, 79), (76, 77, 79), (44, 44, 44)]

>>> len(pixels)

16

>>> pixels[7]

(255, 255, 255)

Note that the length of this list (len(pixels)) is

16. Why? (Highlight to see.) Because an

image that is 4 pixels wide and 4 pixels tall has 4x4=16 total

pixels. Now if you wanted to operate on this image data,

you would not do the nested loop that I spoke

about in Unit

1 Tutorial 4, but

instead you could loop over the image as one sequence of pixel

values — in other words, with one single for

loop. To illustrate this, notice how I

wrote pixels[7] which returned the eigth pixel of

the image (which in this case is white: 255, 255, 255). Of course, working this

way it would be difficult to think about the specific x,y

location of a given pixel in the image. But there are advantages

of working both ways.

It is important to keep in mind that data structures can contain other data structures.

To illustrate this, let's think about a different data structure for working with digital images. Because a digital image is typically a two dimensional object (it has a width and height), it probably makes sense to think about the pixels of a digital image as stored in a two dimensional data structure. To achieve this, instead of one list of many pixel values, we could make a list corresponding to the rows, then each item in that list is itself another list which corresponds to all the pixels in that row. In the Python shell, for the above 4 x 4 image, that would look like this:

>>> img = []

>>> img.append( [(0, 0, 2), (118, 119, 121), (112, 113, 115), (0, 0, 0)] )

>>> img.append( [(76, 77, 79), (159, 160, 162), (150, 151, 153), (255, 255, 255)] )

>>> img.append( [(62, 63, 65), (95, 96, 98), (104, 105, 107), (50, 50, 52)] )

>>> img.append( [(48, 49, 51), (76, 77, 79), (76, 77, 79), (44, 44, 44)] )

>>> img

[ [(0, 0, 2), (118, 119, 121), (112, 113, 115), (0, 0, 0)],

[(76, 77, 79), (159, 160, 162), (150, 151, 153), (255, 255, 255)],

[(62, 63, 65), (95, 96, 98), (104, 105, 107), (50, 50, 52)],

[(48, 49, 51), (76, 77, 79), (76, 77, 79), (44, 44, 44)]]

Notice that I create img as an empty

list [], and then each time I

called append(), I am adding another list

into img, because the argument

to append() each time is also contained in square

brackets.

So what I have here is a list of lists.

Common student question. Does Python somehow know this is an image? No, Python does not think of this data structure at this point as automatically an image in any special way. It is just a data structure for storing numbers, that we are thinking of as something that represents pixel values. We could use this data, structured in this way, to pass in to a library like Pillow to make it into an image. This illustrates how data can have an abstract quality. We could also use this same data structure if we were implementing some kind of spreadsheet application, for example. Or really, any other kind of data that had a "two dimensional" structure. Say, if we were implementing a chess or checkers game.

In this arrangement, the text in blue would correspond to the second row of pixels. You could access this single row of this data with a regular index:

>>> img[1] [(76, 77, 79), (159, 160, 162), (150, 151, 153), (255, 255, 255)]And you could access a single item in that row like this: (Note that

row is not a special keyword here.)

>>> row = img[1] >>> row[3] (255, 255, 255)You could shortcut this by using two indices at once, like this:

>>> img[1][3] (255, 255, 255)And you can even use the double index notation to modify values, like this:

>>> img[1][3] = (0,0,0)

>>> img

[[(0, 0, 2), (118, 119, 121), (112, 113, 115), (0, 0, 0)],

[(76, 77, 79), (159, 160, 162), (150, 151, 153), (0, 0, 0)],

[(62, 63, 65), (95, 96, 98), (104, 105, 107), (50, 50, 52)],

[(48, 49, 51), (76, 77, 79), (76, 77, 79), (44, 44, 44)]]

Notice that I've changed the last item in the second row, as

indicated by my indices.

(jump back up to table of contents)

I.b. Tuples

Each of these pixel values with its red, green, and blue components (or hue, saturation, and brightness if you're working with that mode) is called a tuple. This weird word comes from thinking about: a pair, a triple, a quadruple, quintuple, sextuple, septuple, octuple, etc, etc, and wanting to generalize that idea. Hence, an n-tuple, or simply, tuple.

List lists, Python considers tuples as sequences: they are stored in sequential order, meaning you can access their values by numerical index. The main difference between a list and a tuple is that a tuple cannot change. It's called immutable.

So you can think of a list as a dynamic way to store an ordered sequence of values, that may grow or shrink as data is added, removed, filtered, sorted, or re-organized. But a tuple is a way to represent two or three numbers together. Like the x, y coordinates of a point on a grid.

Here is an example of creating a tuple, accessing its individual values by index, and attempting to modify the second value:

>>> t = (50, 75) >>> t (50, 75) >>> t[0] 50 >>> t[1] 75 >>> t[1] = 100 Traceback (most recent call last): File "<stdin>", line 1, in <module> TypeError: 'tuple' object does not support item assignment

Of course, we have already seen tuples in action as well: PIL

uses them to hold (x,y) coordinates as well as

the (r,g,b) color values of a single pixel.

Now that you know what a tuple is, hopefully that double parenthesis syntax that we've already been using might look a little less strange. When we try to get or put a specific pixel value using PIL, it looks like this: (from Unit 1, Lesson 4)

pixel = new_img.getpixel( (x,y) )The strange double parenthesis?

( ( ) ) are because

this command takes one single argument, but that single

argument is a tuple containing two values. So

you are really passing in a single tuple here

with the value (x,y). Looks weird, but maybe now a

little clearer.

(jump back up to table of contents)

I.c. Dictionaries

One of the most powerful data structures in

Python is the dictionary (often called

a dict). Like a regular dictionary, in which you

can look up a word and get a definition, in a Python dictionary

you can look up a key and access

a value. Another way of describing this is that

a dictionary creates a mapping between keys and

values; or, we might say it creates a set of key-value

pairs.

Dictionaries have some operations in common with lists, and some

differences. A dictionary is created with curly

braces; new key-value pairs are added by specifying the

key in brackets; values are also retrieved using square brackets

like with lists, but the indices need not be numbers; you can

get the number of key-value pairs in the dictionary

with len(); and (importantly!) you can

check if a key is already in a dictionary with

the in command:

>>> d = {}

>>> d["hair"] = "orange"

>>> d["species"] = "monster"

>>> d["name"] = "Gritty"

>>> print(d)

{'hair': 'orange', 'species': 'monster', 'name': 'Gritty'}

>>> d["hair"]

'orange'

>>> len(d)

3

>>> "name" in d

True

>>> "age" in d

False

Just like with lists and tuples, you can combine dictionaries with lists in all sorts of ways. First I'll put a list into my dictionary, then I'll create another dictionary and put them both in a list:

>>> d["lucky numbers"] = [ 4, 11, 42, 101 ]

>>> d

{ 'hair': 'orange', 'species': 'monster', 'name': 'Gritty',

'lucky numbers': [4, 11, 42, 101] }

>>>

>>> d2 = {}

>>> d2["hair"] = "brown"

>>> d2["species"] = "human"

>>> d2["name"] = "Rory"

>>>

>>> ds = [ d, d2 ]

>>> ds

[ { 'hair': 'orange', 'species': 'monster', 'name': 'Gritty',

'lucky numbers': [4, 11, 42, 101] },

{ 'hair': 'brown', 'species': 'human', 'name': 'Rory' } ]

We saw dictionaries in action in the previous technical lesson when we were working with Exif data.

This all might seem fairly abstract at the moment, but we'll work through some examples today which will hopefully add some concrete understanding and clarity. And the first exercise in the homework for this week asks you to think about how you might combine data structures in interesting ways.

(jump back up to table of contents)II. Data scraping

Now that we have this wonderful, rich toolbox of data structures to work with, let's start thinking about some new ways of getting data into them.

One way to do this is a technique known as web scraping, which essentially means using tools like computer programs and scripts to automate the tasks of gathering data from public web sites. I'm not sure the origins of the term "scraping", but it is also related to web crawling, a term used to describe what search engines do when they index the web by using algorithms to automatically comb through all websites. It would be interesting to perhaps trace the differing origins of web scraping and web crawling and what types of techno-political work these various terms perform in how we understand these operations and who does them. They are both forms of automated web browsing - maybe scraping is the maligned version of this practice, which we are not supposed to do, while crawling is the friendlier and more widely accepted version that larger institutions more typically do. To finely parse the technical distinction, I think we could say that web scraping is about extracting data from one or more websites, while crawling is more about finding or discovering URLs or links on the web and how they form interrelated networks.

(jump back up to table of contents)Interestingly, there has been recent litigation around the legality of web scraping. University of Michigan legal scholar Christian Sandvig (whose article about sorting we read during the first week of the semester) was one of the plaintiffs in these proceedings.

In my opinion, it is difficult to understand how this could be considered illegal when gathering data in this way is essentially the business model of Google and every search engine or platform that indexes web content. It is almost as if when industry does it, it's called web crawling, but when individuals do it, it's referred to more menacingly as web scraping, and becomes legally challenged.

We will read about the politics of scraping (and crawling) in the Sam Levigne text for next week, which we'll discuss next class.

II.a. Scraping tools

To do this work, we're going to need some new tools, in the form

of two new Python libraries: the requests

library, which facilitates the downloading of webpage content

from within Python, and Beautiful Soup, a

library that offers utilities for parsing web pages. Install

them at the command line (not in the Python shell),

with the following commands (or whatever variation

of pip worked for you when installed

Pillow):

$ pip3 install requests $ pip3 install beautifulsoup4

If you see a "WARNING" about yourpipversion, that does not mean anything is necessarily wrong. But if you get an error that says something aboutthe latest version of pip, you can try upgradingpipwith the following command:$ pip3 install --upgrade pip

You'll see some output about "downloading", hopefully a progress bar, and hopefully a message that it's been "Successfully installed". Test that your installation worked by running the Python shell and typing two commands, like this:

$ python >>> import requests >>> from bs4 import BeautifulSoupIf your shell looks like mine (no output) then that means everything worked. (jump back up to table of contents)

II.b. Downloading a webpage with Python

To get started, let's do some experimenting in the interactive shell for a minute before we create any Python code files. Let's start by downloading the front page of the New York Times website:

>>> import requests

>>> response = requests.get("https://nytimes.com")

>>> response

<Response [200]>

If you see something like this, it means that this worked!

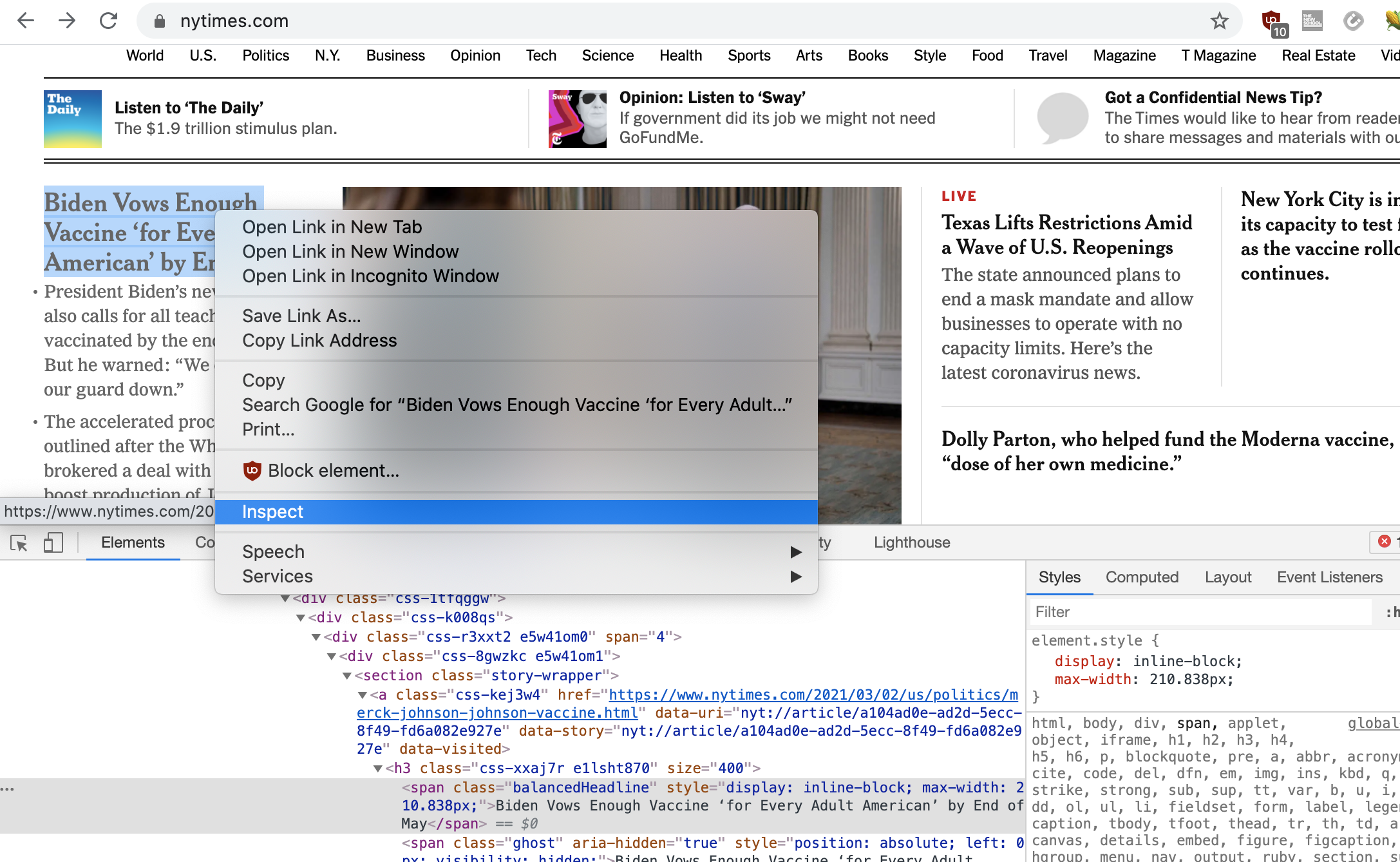

The web is structured around requests and responses. Usually, you would type a URL or click on a link in your web browser, and your browser on your computer would make a request to a program on another computer called a server. Your browser's request is specially formatted in accordance with HTTP: the hypertext transfer protocol. A web browser and web server are both just computer programs that are able to encode and decode the rules of this protocol. When the server receives a request, it replies with a response, which your browser receives and uses to extract the HTML that is rendered to you in your browser window.

The blue parts of this diagram are more or less what

comprise HTTP: the hypertext transfer protocol.

The blue parts of this diagram are more or less what

comprise HTTP: the hypertext transfer protocol.

What we've just done with the above lines of code is all of that but without the user-friendly graphical user interface (GUI) of the browser. Sometimes people call this a "headless" mode: doing the work of a GUI application but without the visual display. In effect, we are using Python code as our web browser: making requests, and receiving responses.

As is often the case, doing things in a manner like this can be more tedious and difficult than using the user-friendly GUI of a web browser, but this modality has advantages as well: by making HTTP requests in this way, we can automate the action of requesting web pages and unlock the power of applying algorithms to this proces, treating web pages like data that we can write code to process.

HTTP specifies various numeric codes to signify the status of responses. The response code 200 signifies a successfully fulfilled request, which is what you should see in the Python shell. Now we have to figure out what to do with this response.

The response object contains various fields that you can access to learn about the response:

-

response.status_codeis the aforementioned HTTP status code. -

response.headerscontains meta data about the HTTP request such as date, time, encoding, size, etc. -

response.cookiesincludes cookies that the server sent back to you. Normally your browser would save those, which is how websites can track you across the web, but we can ignore them. -

response.elapsedindicates the total time that the server needed to fulfill this request. -

and

response.textis the main payload: the entire content of the response from the server, containing a large amount of plain text, HTML, Javascript, and CSS code.

response.text, that is way

too big. If you want to get a teaser about what this contains,

try typing the following:

>>> response.text[:500] '<!DOCTYPE html>\n<html lang="en" class=" nytapp-vi-homepage" xmlns:og="http://open graphprotocol.org/schema/">\n <head>\n <meta charset="utf-8" />\n <title data-rh="true"> The New York Times - Breaking News ...That is strange new Python syntax that uses a colon (

:) inside square brackets is called

a slice: it returns part of a

sequence. (Remember, in Python

a sequence can be a list,

a string, a tuple, and even

some other things.) In this case, this slice

returns the first 500 characters of the string. That will show

you a somewhat readable bit of the response.

What should we do with this response? One thing we can do is simply save the response to disk, as a file. So far you have worked with files in Python as a way to read text, and as a way to write pixel data to make images. But you can also work with files as a way to write text.

(jump back up to table of contents)II.b.i. Saving a response as a file

We could save the entire HTML contents, like so:

>>> nytimes = open('FILENAME.html', 'wb')

>>> for chunk in response.iter_content(100000):

... nytimes.write(chunk)

Because of the way that files work, it is better to write the

file to disk in these large segments of data that we called

here chunk, each of 100000 bytes (100KB). Now,

whatever you specified as FILENAME.html will exist

as an HTML file in your current working directory. You can open

that in Finder/Explorer, and double click the file to open it in

your browser.

You have just used Python code to download the full contents of one web page. Now let's see how we can start to automate this process in different ways.

(jump back up to table of contents)II.b.ii. Processing response text

What else can we do with the response? Well if you wanted to

take human-readable textual content of this response and start

to work with it as you have been doing — for example, to

generate things like word frequency counts, finding largest

words, and other algorithmic processing, you can start working

with response.text. However, that is

the full contents of the HTML page returned from the

server, which, as you can see when you examine a slice of that

data, contains a bunch of HTML tags, Javascript code, and other

meta data that probably wouldn't be relevant to your

textual analysis.

(Unless ... Maybe someone would want to do textual analysis on HTML code, treating it like a human language text, and analyze word lengths, frequencies, etc. Could be an interesting project.)

To start working with the content of this response in a way that focuses on the human-readable textual content and not the HTML code, we can parse the HTML using the Beautiful Soup library. Parsing is the term for dividing up any syntax into constituent elements to determine their individual meanings, and the meaning of the whole. Web browsers parse HTML, and then render that document as a GUI.

As an interesting first parsing operation, let's use Beautiful Soup simply to extract all the plain textual content, stripping out all the HTML:

>>> from bs4 import BeautifulSoup >>> soup = BeautifulSoup(response.text, "html.parser") >>> plain_text = soup.get_text() >>> plain_text[:500] '\n\n\nThe New York Times - Breaking News, US News, World News and Videos\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nContinue reading the main storySectionsSEARCHSkip to contentSkip to site indexU.S.International CanadaEspañol中文Log inToday’s Paper ...'On the second line, we are passing

response.text in

to the BeautifulSoup object. On the next line,

we're telling the BeautifulSoup object, which I

have called soup but you could call anything, to

give us just the text, which I'm setting into a variable

called plain_text, but again, you could call

anything. Now, when I print a slice of the

first 500 characters to the shell, you can see that it has

stripped out all the HTML tags and meta data, giving me just the

text — well, also some strangely encoded characters, and

the spacing is a little messed up, but it looks like something

you could work with. For example, if you look at another slice

of the document, it looks pretty good:

>>> plain_text[15000:15500] 'hat the Rise of Clean Energy Looks Like From SpaceNew data from a constellation of satellites 250 miles above Earth’s surface shows how solar and wind have taken off in recent years.The New York TimesWhy the Fed’s Job May Get a Lot More Difficult ...'We could save this plain text to a file as well if you wish:

>>> nytimes_plain = open('FILENAME.txt', 'w')

>>> nytimes_plain.write(plain_text)

19663

>>> nytimes_plain.close()

Note the .txt file extension to indicate this is

plain text and not HTML. Note also that in open()

I am using 'w' this time instead

of 'wb' as above. Since I'm using a string here

instead of a response object, this other mode of

writing a file is possible and simpler.

(jump back up to table of contents)

II.c. Parsing HTML

But Beautiful Soup parsing let's us do much more than just strip out all the HTML tags of a web page, it actually let's us use those HTML tags to select specific parts of the document. let's use Beautiful Soup to try to pull out the clickable links that connect pages together. Then use a data structure to store those pages and links in some way. And finally, at the end of class we'll see how to create a representation of this as a visual sitemap.

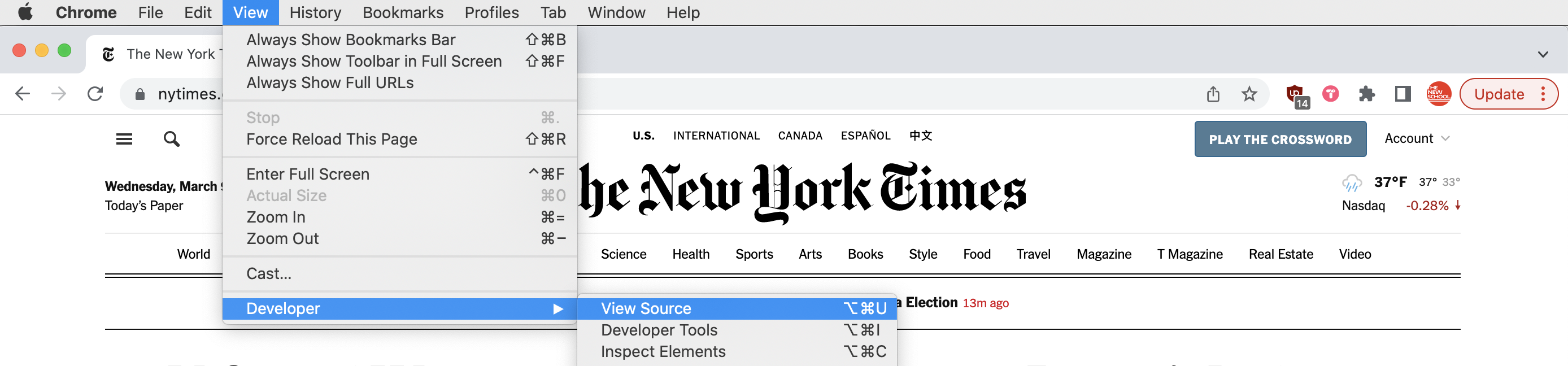

First, in order to understand how this will work, we have to poke around a bit in the structure of the HTML that we want to parse. You may already know a little HTML and CSS. We'll come back to it in the final unit of the semester. For now, a few small details will suffice to do this current task.

Open up nytimes.com in your browser. If you are

using Chrome, you can right-click anywhere in the HTML and

select "Inspect" to view the underlying code of this

webpage. (If you don't have Chrome, you can still do this with

any up-to-date browser, but I can't offer details instructions

for each one. One Firefox for example, it is almost the same as

Chrome: right-click anywhere in the page, and select "Inspect

element".) Doing this will open up a kind of developer console,

comprised of many tools that you can use to disect a webpage,

and the various requests and responses that generated it.

Play around a bit. Right-click on several parts of the page, select "Inspect", and then see what the underlying HTML code is for this section of the document. Also, you can move the mouse pointer around in the "Elements" tab in the developer console, and as you highlight different HTML elements, you should see those highlighted in the GUI area. This can be a very effective way to learn HTML/CSS. Again, we'll come back to this technique later in the semester.

In addition to the Inspect tool, you can also simply view the source code for any given page. Again in Chrome, you can do this simply by clicking View > Developer > View Source, and for other browsers it is similar.

For now, let's just focus on how links between pages are

constructed. This is done with an HTML element known as

an <a> tag, whose syntax looks like this:

<a href="http://url-of-this-link" other-properties>Visual text of the link</a>That syntax will display "Visual text of the link" to the user, and when the user clicks that link, it will take them to the URL

http://url-of-this-link.

Beautiful Soup gives us functions in its API specifically for parsing and retrieving elements of an HTML file that match some criteria. (See the Beautiful Soup documentation, "Searching the tree" section.)

What I'm interested in at the moment is selecting all

of the <a> tags that create links to other

pages.

Specifically, Beautiful Soup offers functionality for doing this

with a command called find_all(). We can

demonstrate it in the Python shell like this:

>>> a_tags = soup.find_all("a")

The variable name a_tags here is arbitrary and can

be anything you'd like.

Now you could say a_tags[0] to access the first

HTML <a> in Python code, or you could

say for a in a_tags: to loop

over all <a> tags, setting each one

to the variable that I'm here calling a.

Accessing the URL that a link points to is also very

straightforward. Notice that my sample line of HTML code above

includes the following

text: href="http://url-of-this-link". This is

called an attribute or

a property and we can access it through

Beautiful Soup with a comamnd called get().

So to loop over all <a> tags in a HTML page

and print out the URLs that they point to would look like this:

for a in a_tags:

print( a.get('href') )

(jump back up to table of contents)

II.d. A web crawling algorithm with a queue

To achieve web crawling behavior, what I would like to do is access a web page, process that page in some way, and then identify all links on that page, and process each of those pages in turn. In this way we can have our program automatically navigate some network of links on the web.

We'll do this with a data structure known as queue. A queue is typically implemented using a list, so it isn't entirely a different data structure, strictly speaking. It is more like a kind of usage pattern - in other words, it is a common way one you might use a list. We'll do this by adding items to the end of the list, and removing items from the beginning. This is a pattern called a FIFO queue, or, "first in, first out".

Let's see how it's done ...

Example 1: Web crawling with a queue, an incomplete example. (This code has some issues discussed below.)

import requests

from bs4 import BeautifulSoup

url_queue = []

url_queue.append("https://nytimes.com")

while len(url_queue) > 0:

next_url = url_queue.pop(0)

response = requests.get(next_url)

soup = BeautifulSoup(response.text, "html.parser")

# Process webpage here using BeautifulSoup

a_tags = soup.find_all("a")

for a in a_tags:

url = a.get('href')

if url not in url_queue:

url_queue.append(url)

Let's analyze this algorithm. It starts by creating a new

list variable called url_queue. This variable name

is arbitrary and could be called anything, but this name

explains what it will be used for. Next we start by adding one

URL to this list: "http://nytimes.com", meaning

that the length of the list will be one -

i.e., len(url_queue) would equal 1. Next we see

a while loop that will repeat as long

as len(url_queue) is greater than zero. Well that

condition is currently met so the loop runs. Inside that loop,

I'm using the new list operation pop() that I

explained above. This removes the specified

item from the list (in this case item 0) and sets

it to the variable next_url. That variable name too

is arbitrary and could be anything. Now we

use requests to access the HTML page,

and BeautifulSoup to parse it. As the comment

indicates, you would probably include additional code in this

section to process the page in some way. Perhaps you'd want to

search for a keyword, find the most frequent word, or something

else. Next, we ask Beautiful Soup for all

the <a> tags on the page with the

command soup.final_all("a") and loop over the

results using the variable a_tags. In this loops,

we access every link on the page and if it is not already

in url_queue, then we add that new link to the

queue.

As you can see, url_queue is growing even as it is

also shrinking. It is removing one page each time

the while loop runs, but each time it does, it adds

more links to the queue - probably adding more than one.

Probably every page on the New York Times website

includes at least more than one link to other URLs. So, this

algorithm will keep adding more URLs onto the queue faster than

it can process them. In other words, the queue will keep getting

longer, will never be emptied, and the while loop will never

end. Let's add some logic to end the loop by serving as

a stopping condition. Here's one way to do that:

Example 2: Progress on the web crawler algorithm, but there are still some issues with this.

import requests

from bs4 import BeautifulSoup

url_queue = []

url_queue.append("https://nytimes.com")

page_count = 0

while len(url_queue) > 0 and page_count < 50:

next_url = url_queue.pop(0)

response = requests.get(next_url)

soup = BeautifulSoup(response.text, "html.parser")

# Process webpage here using BeautifulSoup

a_tags = soup.find_all("a")

for a in a_tags:

url = a.get('href')

if url not in url_queue:

url_queue.append(url)

page_count = page_count + 1

This simple addition introduces a counter

variable which we increment by + 1

each time the loop runs, and then we add an additional condition

to the while loop. So this page will never process

more than 50 pages max. You can easily adjust that number to

modify the cap on your data scraper.

As one last issue to address, we have to think about a

complicated detail in how URLs are specified in HTML code. A URL

is like a file path, which we've been

thinking a lot about already this semester: a file

path is the location of a file on your local

computer. I've mentioned that a path can

be relative, meaning that it "starts" from the

some folder and refers to subfolders below it,

or absolute, meaning that it starts at the top

level folder of your computer, called root,

signified with /.

As I have also mentioned, a URL is like a file path on a remote

computer, a server, which you connect to through a network. When

an HTML page specifies a link with an <a>

tag, it can either be specified as a absolute

URL or an relative

URL. An absolute URL starts

with http://, and specifies the name of the server

and the complete path to a file on that

server. A relative URL specifies a shortened

part of that path which indicates to the browser how to find

that file relative to the current file. So for example,

the files might be in the same folder, or one might be in a

parent or child folder. Web scrapers have to carefully deal with

relative URLs.

Fortunately, the Python library urllib handles this process for us. We can use this library as follows:

Example 3: A complete web crawler algorithm that finally works out all the kinks.

import requests from bs4 import BeautifulSoup import urllib.parse url_queue = [] url_queue.append("https://nytimes.com") page_count = 0 while len(url_queue) > 0 and page_count < 50: next_url = url_queue.pop(0) response = requests.get(next_url) soup = BeautifulSoup(response.text, "html.parser") # Process webpage here using BeautifulSoup a_tags = soup.find_all("a") for a in a_tags: url = a.get('href') url = urllib.parse.urljoin(next_url,url) if url not in url_queue: url_queue.append(url) page_count = page_count + 1

This wonderful command handles a lot of complexity for

us. If url is

absolute, urllib.parse.urljoin(next_url,url) will

simply return that absolute URL. But,

if url is relative, then it is

relative in relation to the current page that we are parsing,

which is represented by next_url, and in that case,

the urljoin() command will properly resolve it into

an absolute URL that we can add to the queue.

II.e. A data structure for data scraping

I mentioned that the code above has a placeholder where the

comment simply says # Process webpage here using

BeautifulSoup.

I hope that this example fires your imagination and gives you

some ideas for different types of things that you could do with

a data scraper. You could replace that commented line with code

implementing any of the algorithms that we have talked about

thus far this semester. The soup variable

represents the parsed webpage that you are currently processing,

and so you could add code to access its text

with soup.get_text() and use that to count word

frequencies, to search for a keyword like a search engine would

do, or something else.

As an example, let's print the webpage URL, its title, the

length of its text content, and whether or not it contains the

word "COVID". Let's say for example that you wanted to do an

algorithmic discourse analysis to see what percentage of news

items were still talking about the pandemic. I'll also print

out page_count just so we can see how many pages

we've processed. We could implement that like this:

Example 4: A web crawler algorithm with some data scraping.

import requests

from bs4 import BeautifulSoup

import urllib.parse

url_queue = []

url_queue.append("https://nytimes.com")

page_count = 0

while len(url_queue) > 0 and page_count < 50:

next_url = url_queue.pop(0)

response = requests.get(next_url)

soup = BeautifulSoup(response.text, "html.parser")

# Process webpage here using BeautifulSoup

print(page_count)

print("URL: " + next_url)

print("Title: " + soup.title.string)

print("Length: " + str( len(soup.get_text()) ) )

print("Contains 'COVID': " + str( "covid" in soup.get_text().lower() ) )

print()

a_tags = soup.find_all("a")

for a in a_tags:

url = a.get('href')

url = urllib.parse.urljoin(next_url,url)

if url not in url_queue:

url_queue.append(url)

page_count = page_count + 1

A couple details. len(soup.get_text()) calculates

the length of the text on the page. That has to be wrapped in

a str() or else the + operator would

complain about joining text to a number (a str and

an int). Also, I'm looking for "covid"

lowercase because I'm not sure how NYT may capitalize the word,

and I'm converting the whole page to lowercase to do the check,

hence: "covid" in soup.get_text().lower().

And now that we actually have some interesting data that we are scraping and processing here, let's think about how we might store this in an appropriate data structure. Think back to the homework for last week. What data structures might you use to store a bunch of webpages where you have some data for each one, and you also want to track how many other pages each page links to?

I'm going to demonstrate how to do this with a dictionary, where the keys will be the URL of each page (so they are definitely unique). Each key will point to another dictionary comprised of the page title, the length, and whether it contains the word "COVID". Let's implement that like this:

Example 5: A web crawler algorithm with data processing that saves the results into a data structure.

import requests from bs4 import BeautifulSoup import urllib.parse pages = {} url_queue = [] url_queue.append("https://nytimes.com") page_count = 0 while len(url_queue) > 0 and page_count < 50: next_url = url_queue.pop(0) response = requests.get(next_url) soup = BeautifulSoup(response.text, "html.parser") # Process webpage here using BeautifulSoup print(page_count) print("URL: " + next_url) print("Title: " + soup.title.string) print("Length: " + str( len(soup.get_text()) ) ) print("Contains 'COVID': " + str( "covid" in soup.get_text().lower() ) ) print() a_tags = soup.find_all("a") for a in a_tags: url = a.get('href') url = urllib.parse.urljoin(next_url,url) if url not in url_queue: url_queue.append(url) page = { "title": soup.title.string, "length": len(soup.get_text()), "mentions_covid": "covid" in soup.get_text().lower() } pages[next_url] = page page_count = page_count + 1

As the last detail here, let's implement the code that creates a list of all URLs that the current page links to, and stores that in the data structure:

Example 6: A web crawler algorithm saving results into a data structure, including a list of all pages linked to.

import requests

from bs4 import BeautifulSoup

import urllib.parse

pages = {}

url_queue = []

url_queue.append("https://nytimes.com")

page_count = 0

while len(url_queue) > 0 and page_count < 50:

next_url = url_queue.pop(0)

response = requests.get(next_url)

soup = BeautifulSoup(response.text, "html.parser")

# Process webpage here using BeautifulSoup

print(page_count)

print("URL: " + next_url)

print("Title: " + soup.title.string)

print("Length: " + str( len(soup.get_text()) ) )

print("Contains 'COVID': " + str( "covid" in soup.get_text().lower() ) )

print()

a_tags = soup.find_all("a")

linked_pages = []

for a in a_tags:

url = a.get('href')

url = urllib.parse.urljoin(next_url,url)

if url not in url_queue:

url_queue.append(url)

linked_pages.append(url)

page = {

"title": soup.title.string,

"length": len(soup.get_text()),

"mentions_covid": "covid" in soup.get_text().lower(),

"linked_pages": linked_pages

}

pages[next_url] = page

page_count = page_count + 1

(jump back up to table of contents)

III. Wrapping up & homework

Hopefully this gives you some building blocks in algorithmic thinking that you can play with. The homework for this week has some opportunities for you to experiment with these ideas. Have fun!