Code as a Liberal Art, Spring 2025

Unit 2, Lesson 2(b) — Tuesday, April 1

More on parsing with Beautiful Soup

Table of contents

- Anatomy of an HTML tag

- Examining an example: scraping a Wikipedia page

- Implementing our example: scraping a Wikipedia page

- A web crawling example: the Wikipedia Philosophy game

- Wrapping up & homework

I. Anatomy of an HTML tag

HTML tags are defined by angle brackets, which must always come in pairs: an opening < and a closing >.

The text between the brackets is said to be inside the brackets, and is what defines the tag.

Some common tags: <div>, <h1>, <h2>, <h3> (and <h4>, etc.), <p>, <img/>, <a>.

Most tags come in pairs, called the opening tag and the closing tag; the closing tag is marked with a forward slash /. For example: <p> </p>. The text between the tags is said to be inside the tag, and it is affected by whatever properties the tag indicates.

Some tags, like <img>, end with a trailing slash / before the last bracket and do not come with a corresponding closing tag, because they simply include an object on the page and do not have any text inside them.

Tags can contain other tags, nested recursively like Russian Matryoshka dolls (a classic metaphor). This is another example of the modularity that we discussed when talking about the Manovich text.
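To see opening tags, closing tags, self-closing tags, and nesting in action without touching the network, here is a small sketch using Python's built-in html.parser module (not Beautiful Soup). The HTML string is made up for illustration, and the sketch assumes self-closing tags are written with the trailing slash, like <img/>; an <img> without the slash would be treated as an opening tag and throw off the depth count.

```python
from html.parser import HTMLParser

class TagOutliner(HTMLParser):
    """Records each tag together with how deeply it is nested."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.outline = []  # list of (depth, tag_name) pairs

    def handle_starttag(self, tag, attrs):
        # An opening tag like <div> starts a new, deeper level.
        self.outline.append((self.depth, tag))
        self.depth += 1

    def handle_endtag(self, tag):
        # A closing tag like </div> comes back up one level.
        self.depth -= 1

    def handle_startendtag(self, tag, attrs):
        # A self-closing tag like <img/> has no contents, so depth is unchanged.
        self.outline.append((self.depth, tag))

html = '<div><h1>Title</h1><p>Some <a href="/x">linked</a> text.</p><img src="pic.png"/></div>'

outliner = TagOutliner()
outliner.feed(html)
for depth, tag in outliner.outline:
    print("  " * depth + tag)
```

Each level of indentation in the printed outline is one more doll inside the previous one: the <a> sits inside the <p>, which sits inside the <div>.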

Additional text can be included inside the brackets of the opening tag; these pieces of text are called attributes. They are defined as key-value pairs, indicated with an equal sign =, and they define additional properties of the tag. For example:

<p id="42" class="user-profile" >

The most common attributes are id and class. The id attribute uniquely identifies one tag on the page. The class attribute is used to define a group of tags on the page.

In web scraping, we use tag names, IDs, and classes to select certain tags (also known as elements) on the page from which we want to extract and save content. This process of selecting certain tags is also called targeting.
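Here is a small sketch of those three kinds of targeting in Beautiful Soup, run on a made-up HTML string rather than a real page. The ids, classes, and names are invented for illustration; only the find() and find_all() calls are the ones this lesson uses.

```python
from bs4 import BeautifulSoup

# A tiny, invented page reusing the attribute example above.
html = """
<div id="profiles">
  <p id="42" class="user-profile">Ada</p>
  <p id="43" class="user-profile">Grace</p>
  <p id="44" class="footer-note">Last updated today</p>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# Attributes are key-value pairs; Beautiful Soup exposes them like a dict.
# "class" is treated as multi-valued, so its value is a list of class names.
first_p = soup.find("p")
print(first_p["id"])      # 42
print(first_p["class"])   # a list containing 'user-profile'

# Target by tag name: every <p> on the page.
print(len(soup.find_all("p")))   # 3

# Target by id: one unique element.
print(soup.find(id="43").get_text())   # Grace

# Target by class: a group of elements. Note the trailing underscore,
# since "class" is a reserved word in Python.
profiles = soup.find_all(class_="user-profile")
print([p.get_text() for p in profiles])   # ['Ada', 'Grace']
```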

II. Examining an example: a Wikipedia page

Let's look at an example: a Wikipedia page.

As a goal, let's try to request this page, download the response, and save the textual content.

Remember from Unit 2 Lesson 2 that we could simply make a Beautiful Soup object and call get_text() on it, but that would give all the human-visible text on the page, including various menus, headers, footers, references, and other things we wouldn't want if we plan to use this text as the corpus for generating other human language.

So, our modified goal will be to request a page, download the response, and extract all the main text on the page, not including the various menus, etc.

We'll do this by first accessing the page manually, viewing the source, and trying to identify the tags, classes, and IDs that define where that main content of the page is.

Let's look at this URL:

https://en.wikipedia.org/wiki/Software

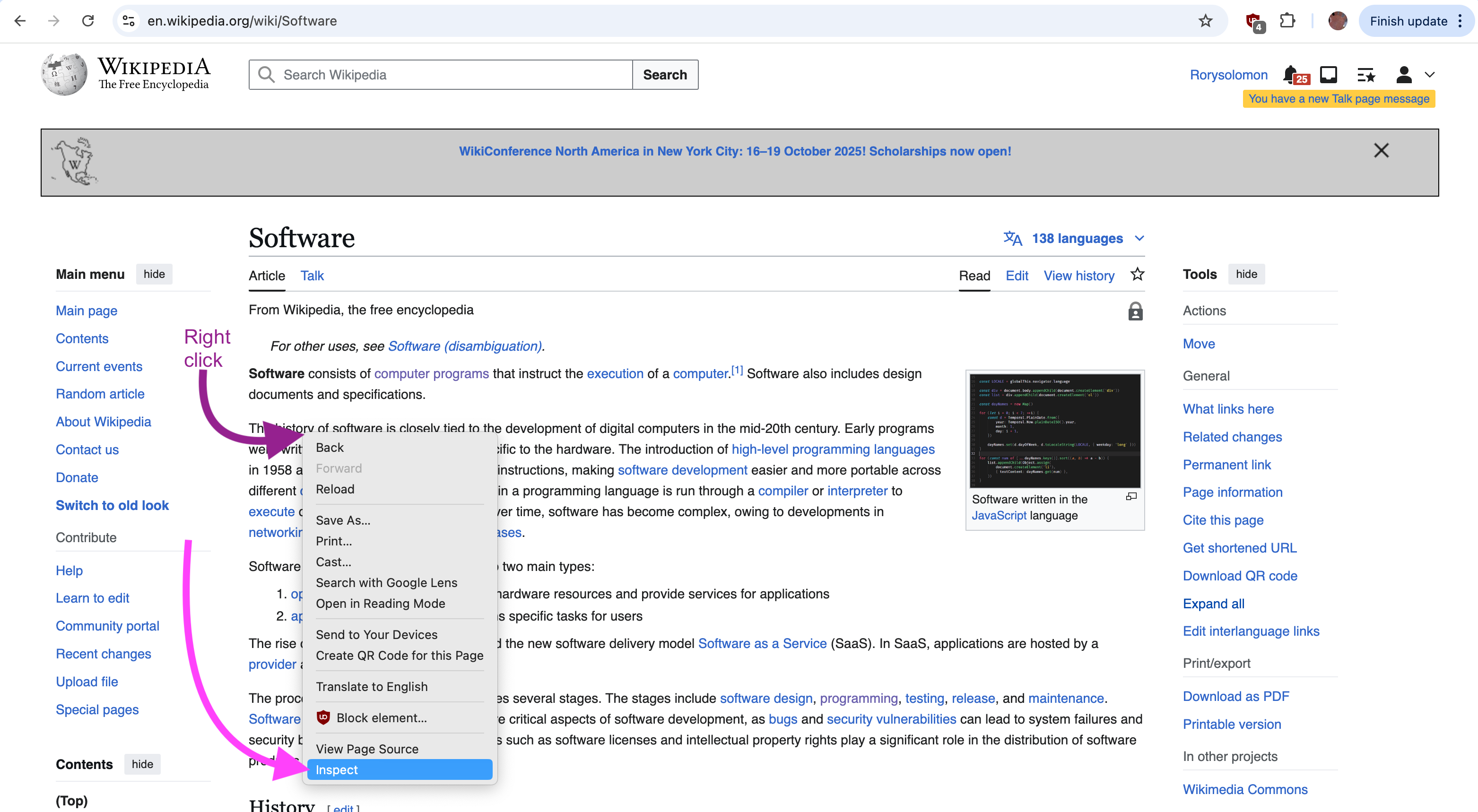

Right-click somewhere in the area of text that we're interested in (in this case, a main body paragraph). Click Inspect to open the Developer Tools window (a.k.a. DevTools).

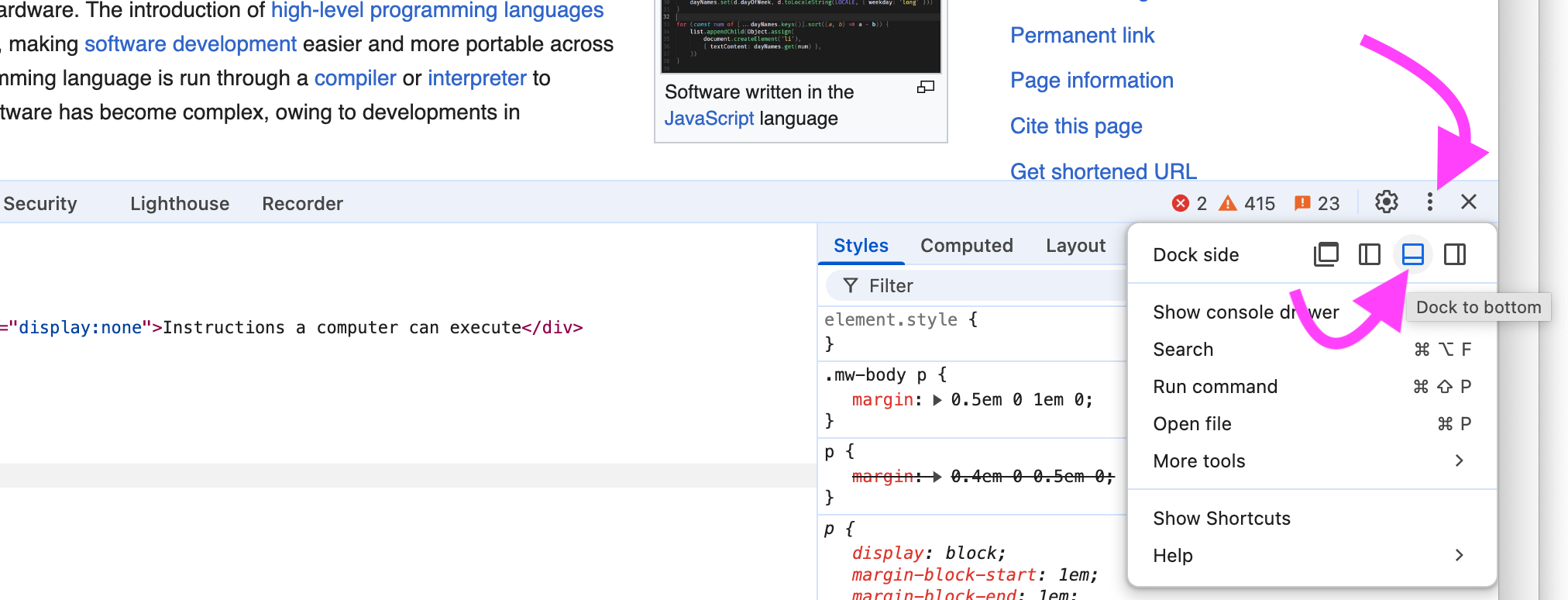

You can arrange your DevTools workspace however you'd like. I like putting the DevTools at the bottom of the browser window. I would advise you to do the same as I think it is most readable. But it's up to you. You can adjust the position of this window here:

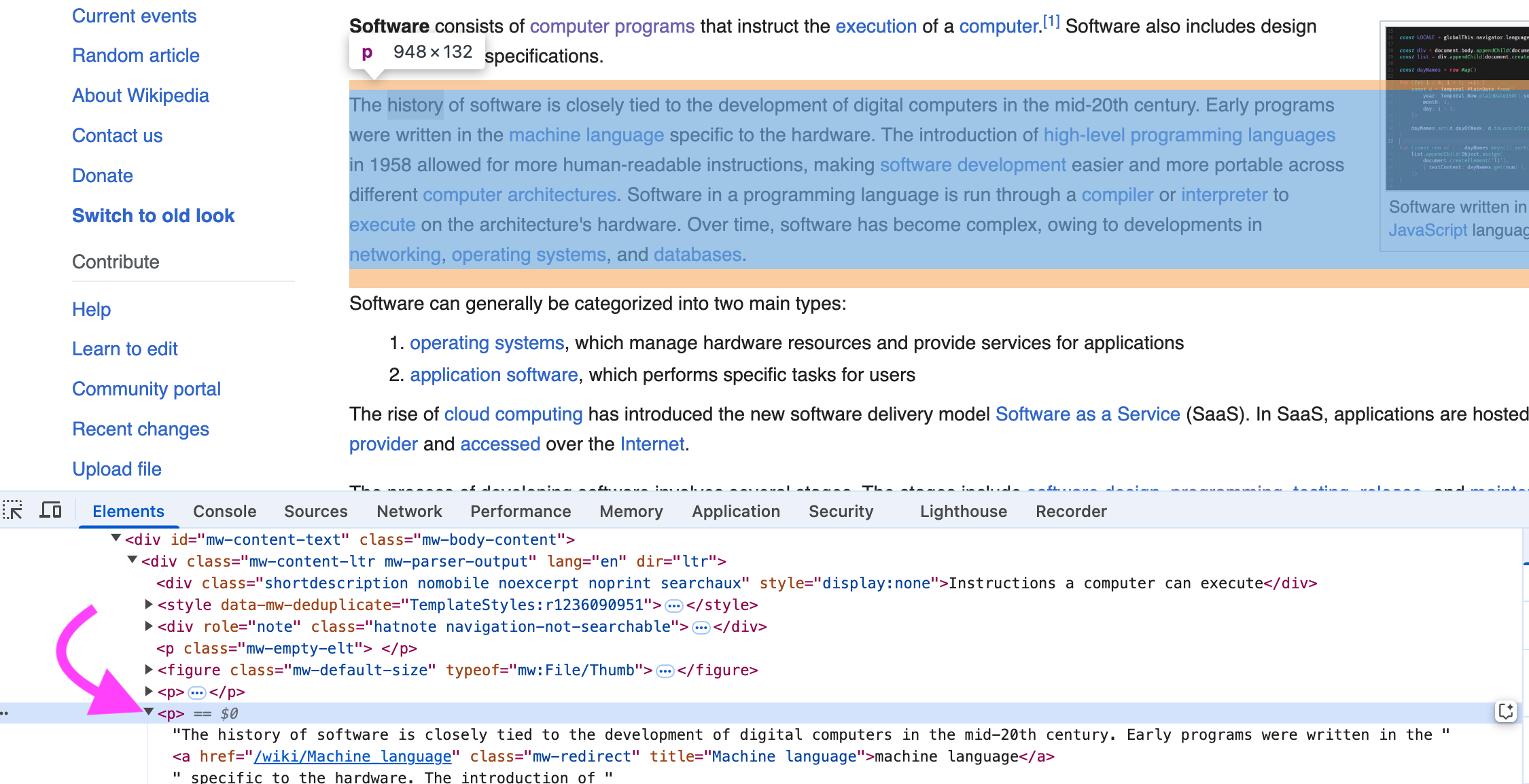

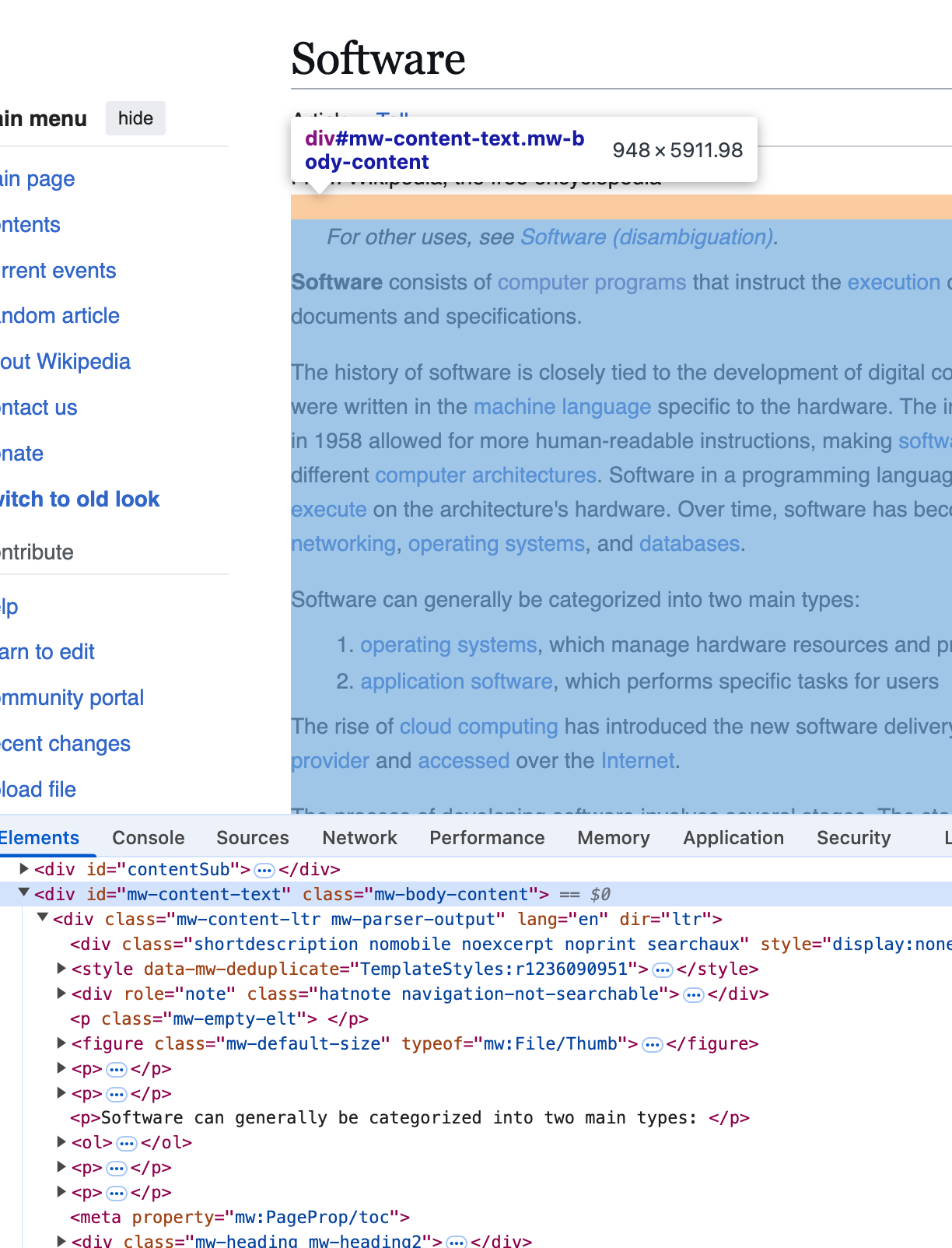

You can mouse over various tags in the Elements tab of DevTools and it will highlight that section of content on the page. You can also click the little arrows to expand/collapse each tag to see its contents.

From looking through the HTML elements of this page, it looks like what we are interested in is the content of all the <p> tags that are contained within the <div> that has the attribute id="mw-content-text".

OK, now our task is getting clearer. We need to parse this HTML to access the textual content of only these tags, i.e. all <p> tags contained inside <div id="mw-content-text"> ... </div>.
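Before requesting the real page, it can help to try this targeting on a tiny mock page. The sketch below is a heavily simplified, invented stand-in for a Wikipedia article; only the id mw-content-text comes from the real page. It also shows soup.select(), a Beautiful Soup method that takes a CSS selector, as an optional alternative way to say "every <p> inside #mw-content-text". We won't rely on select() in this lesson.

```python
from bs4 import BeautifulSoup

# Invented mock page: a menu, the main content div, and a footer.
html = """
<html><body>
  <div id="site-menu"><p>Main menu</p></div>
  <div id="mw-content-text">
    <p>Software is a set of computer programs.</p>
    <p>It is usually stored on a computer.</p>
  </div>
  <div id="footer"><p>Privacy policy</p></div>
</body></html>
"""
soup = BeautifulSoup(html, "html.parser")

# get_text() on the whole page also picks up the menu and footer.
everything = soup.get_text()
print("Main menu" in everything)   # True

# Only the <p> tags inside <div id="mw-content-text">.
main_div = soup.find(id="mw-content-text")
main_ps = main_div.find_all("p")
print([p.get_text() for p in main_ps])

# The same selection written as a CSS selector.
same_ps = soup.select("#mw-content-text p")
print([p.get_text() for p in same_ps])
```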

This is precisely what Beautiful Soup is for.

III. Implementing our example: a Wikipedia page

Let's make a new folder for this work: Unit 2 Lesson 2b. And make a new Python file in this folder. I'll call mine parse.py.

Let's build on what we talked about in Unit 2 Lesson 2.

Add the two import statements at the top.

Request the page using the requests module and its get() function. Then make a BeautifulSoup object from the response.

import requests

from bs4 import BeautifulSoup

response = requests.get("https://en.wikipedia.org/wiki/Software" )

soup = BeautifulSoup(response.text, "html.parser")

The rest of this lesson will make extensive use of the Beautiful Soup documentation. I will be sharing certain commands from this library and explaining what they do. I advise you to refer back to this documentation as the definitive source for all Beautiful Soup commands, with full and precise explanations of what they do.

find_all() is the real powerhouse of Beautiful Soup. It returns a list of every matching tag. Try printing one paragraph from that list, for example the one at index 4:

import requests

from bs4 import BeautifulSoup

response = requests.get("https://en.wikipedia.org/wiki/Software" )

soup = BeautifulSoup(response.text, "html.parser")

all_paragraphs = soup.find_all("p")

print( all_paragraphs[4] )

OK, but we only want some paragraphs, i.e. the ones inside the <div> with that id.

Let's use find_all() to target that <div>:

import requests

from bs4 import BeautifulSoup

response = requests.get("https://en.wikipedia.org/wiki/Software" )

soup = BeautifulSoup(response.text, "html.parser")

all_paragraphs = soup.find_all("p")

print( all_paragraphs[4] )

main_div = soup.find_all(id="mw-content-text")

print( len(main_div) )

I don't want to print out that entire <div>, so let's just print the length of the list that find_all() returns.

As we might expect, the length is one! Why? Because an id is meant to identify one unique element on the page.

The find_all() command has an argument that could be useful here: limit=X. For example:

soup.find_all(id="mw-content-text",limit=1)

But there is an even better way of doing this. When you know that find_all() will only return one item, you can use find(), which searches for an element and returns only the first match (or None if no matching element is found):

import requests

from bs4 import BeautifulSoup

response = requests.get("https://en.wikipedia.org/wiki/Software" )

soup = BeautifulSoup(response.text, "html.parser")

main_div = soup.find(id="mw-content-text")

print( main_div["id"] )  # find() returns the tag itself (not a list), so we can read its id directly

Remember:

- find_all() will always return a list of items, even if that list only contains one item, or none.

- find() will always return one item (the first match), but if none are found, that will be the special Python null object None.
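A quick offline check of these rules, using an invented one-line HTML string. The last few lines show why it is worth checking for None before calling methods on what find() returns.

```python
from bs4 import BeautifulSoup

html = '<div id="mw-content-text"><p>One</p><p>Two</p></div>'
soup = BeautifulSoup(html, "html.parser")

# find_all() always gives back a list...
print(len(soup.find_all("p")))            # 2
print(len(soup.find_all("p", limit=1)))   # 1: stops after the first match
print(len(soup.find_all("table")))        # 0: nothing matched, but still a list

# ...while find() gives back a single tag, or None.
print(soup.find("p").get_text())          # One
print(soup.find("table"))                 # None

# Calling a method on None would raise an AttributeError, so check first.
table = soup.find("table")
if table is None:
    print("no table on this page")
```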

Now that we have the main div that we are looking for, let's get all the <p> tags within that:

import requests
from bs4 import BeautifulSoup

url = "https://en.wikipedia.org/wiki/Software"
response = requests.get(url)
soup = BeautifulSoup(response.text, "html.parser")
main_div = soup.find(id="mw-content-text")
main_p_tags = main_div.find_all("p")

And now that we have all the <p> tags, let's make a new empty string variable, loop over the <p> tags, get the text of each one, and append that to the string:

import requests

from bs4 import BeautifulSoup

url = "https://en.wikipedia.org/wiki/Software"

response = requests.get(url)

soup = BeautifulSoup(response.text, "html.parser")

main_div = soup.find(id="mw-content-text")

main_p_tags = main_div.find_all("p")

main_text = ""

for p in main_p_tags:

    main_text = main_text + p.get_text()

Now, main_text will contain all the text that we're interested in from this article.

Finally, to fulfill the task of exercise 3, let's add a list of URLs, loop over that, and put all the above code into that loop so it repeats once for each URL in the list. I will also add a text file, and each time through the loop we will write the variable main_text to that file.

import requests
from bs4 import BeautifulSoup

urls = [
    "https://en.wikipedia.org/wiki/Software",
    "https://en.wikipedia.org/wiki/Compiler",
    "https://en.wikipedia.org/wiki/Database",
    "https://en.wikipedia.org/wiki/Operating_system",
    "https://en.wikipedia.org/wiki/Computer_network",
    "https://en.wikipedia.org/wiki/virus"
]

# "with" closes the file automatically; utf-8 lets us write characters like é on any OS.
with open('wikipedia.txt', 'w', encoding='utf-8') as wikipedia_file:
    for url in urls:
        response = requests.get(url)
        soup = BeautifulSoup(response.text, "html.parser")
        main_div = soup.find(id="mw-content-text")
        main_p_tags = main_div.find_all("p")
        main_text = ""
        for p in main_p_tags:
            main_text = main_text + p.get_text()
        # Write this article's text, plus a newline to separate it from the next one.
        wikipedia_file.write(main_text + "\n")

Running this should produce one text file (wikipedia.txt) that will contain the text of all the articles we've added to our URL list. You can expand this corpus by manually adding new URLs to the list.
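One possible refactor, offered as a suggestion rather than the way we did it above, is to move the parsing into a function (extract_main_text is a hypothetical name) that takes HTML text and returns the main text. That lets you test the parsing on a small HTML string without requesting anything. This sketch also returns an empty string instead of crashing when a page has no mw-content-text div, and joins paragraphs with a newline so they don't run together.

```python
from bs4 import BeautifulSoup

def extract_main_text(html):
    """Return the text of every <p> inside <div id="mw-content-text">."""
    soup = BeautifulSoup(html, "html.parser")
    main_div = soup.find(id="mw-content-text")
    if main_div is None:
        # e.g. an error page: return nothing instead of calling None.find_all()
        return ""
    paragraphs = [p.get_text() for p in main_div.find_all("p")]
    # A newline between paragraphs keeps them from running together.
    return "\n".join(paragraphs)

sample = '<div id="mw-content-text"><p>First.</p><p>Second.</p></div>'
print(extract_main_text(sample))
print(repr(extract_main_text("<p>No main div here</p>")))   # ''
```

Inside the URL loop, the parsing lines could then shrink to something like `wikipedia_file.write(extract_main_text(response.text) + "\n")`.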

We will use a corpus like this as the basis for the next part of the project which is to generate human language sentences using a Markov model.

IV. A web crawling example: the Wikipedia Philosophy game

Finally, let's turn our attention to exercise 2: adding a queue to do web crawling.

Note the brief discussion about queues from Unit 2 Lesson 2, section II.d.

A queue is typically implemented with a list, which raises the question: what then exactly is a data structure? A data structure is a technical object with certain specific usage patterns.

A queue in this case, then, is a list that is being used as a queue: we add stuff at the end and remove stuff from the beginning. This is the so-called FIFO behavior (first in, first out).

What are some alternatives to FIFO? In class we talked about LILO (last in, last out), but it turns out that LILO is actually the same behavior as FIFO.

The most common alternative to the FIFO model is LIFO (last in, first out). This behavior is used with a list to implement a data structure known as a stack. Stuff is both added to and removed from the same "end" of the list.

By analogy, if queues are used to manage people showing up to get some kind of resource ("customers will be served in the order they arrive"), then a stack could be used for something like an organization that has to lay people off and seeks to do so fairly by recognizing seniority, letting go of the newest people first.
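A minimal sketch contrasting the two behaviors on a plain Python list. Both add with append(); the only difference is which end we remove from.

```python
arrivals = ["first", "second", "third"]

# Queue (FIFO): add at the end with append(), remove from the front with pop(0).
queue = []
for item in arrivals:
    queue.append(item)
served = []
while len(queue) > 0:
    served.append(queue.pop(0))
print(served)   # ['first', 'second', 'third']

# Stack (LIFO): add at the end with append(), remove from the end with pop().
stack = []
for item in arrivals:
    stack.append(item)
let_go = []
while len(stack) > 0:
    let_go.append(stack.pop())
print(let_go)   # ['third', 'second', 'first']
```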

In digital systems, stacks are used in many ways. For example, programming language parsers use them to keep track of recursively nested syntax like parentheses or HTML tags, and programming languages use a stack to keep track of subroutines that call other subroutines.
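To make the parser example concrete, here is a simplified sketch of how a stack can check that tags nest properly. It assumes the tags have already been pulled out into a list of strings like "<div>" and "</div>" (tags_are_balanced is a hypothetical helper); a real HTML parser does much more than this.

```python
def tags_are_balanced(tags):
    """Check that opening and closing tags nest properly, using a stack."""
    stack = []
    for tag in tags:
        if tag.startswith("</"):
            name = tag[2:-1]
            # A closing tag must match the most recently opened tag.
            if len(stack) == 0 or stack.pop() != name:
                return False
        else:
            # Remember the opening tag's name until its closing tag shows up.
            stack.append(tag[1:-1])
    # Every opening tag must have been closed.
    return len(stack) == 0

print(tags_are_balanced(["<div>", "<p>", "</p>", "</div>"]))   # True
print(tags_are_balanced(["<div>", "<p>", "</div>", "</p>"]))   # False
```

The last tag opened is the first one that must be closed, which is exactly LIFO.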

We won't be using a stack for this exercise as the queue is the more appropriate data structure for web crawling.

The key operations of a queue:

- pop(0): returns (and removes) the first item in a list. (We have not seen this command yet.)

- append(): adds an item to the end of a list. (We have already seen this command!)
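For the short queues in this lesson a plain list is fine. If a crawl grows large, though, pop(0) gets slower, because every remaining item has to shift forward by one. Python's standard library has collections.deque for this; in the sketch below, its popleft() method does the job of pop(0).

```python
from collections import deque

queue = deque(["https://en.wikipedia.org/wiki/Software"])
queue.append("https://en.wikipedia.org/wiki/Compiler")   # add to the end

first = queue.popleft()   # remove from the front (deque's version of pop(0))
print(first)              # https://en.wikipedia.org/wiki/Software
print(len(queue))         # 1
```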

The goal with the Wikipedia Philosophy game is to start from some article, find the first link in its first main paragraph that is not a link about word pronunciation, follow that link, and repeat until you reach the "Philosophy" article.

After some difficulty in class with some subtleties of parsing this out in the HTML code of Wikipedia pages, I arrived at the following example:

import requests
from bs4 import BeautifulSoup
import urllib.parse

queue = [ "https://en.wikipedia.org/wiki/Software" ]

while len(queue) > 0:
    url = queue.pop(0)
    print("url: ", url)
    if "/Philosoph" in url:
        print("Done!")
        break
    response = requests.get(url)
    soup = BeautifulSoup(response.text, "html.parser")
    main_div = soup.find(id="mw-content-text")
    main_div_p_tags = main_div.find_all("p")

    # Some nuanced parsing here to handle different cases.
    # Wikipedia page HTML code is pretty uniform, but there
    # still are some variations and exceptions:
    first_p = None
    i = 0
    while not first_p:
        maybe_first_p = main_div_p_tags[i]
        # Skip empty placeholder paragraphs marked with the class "mw-empty-elt".
        if "class" in maybe_first_p.attrs and "mw-empty-elt" in maybe_first_p.attrs["class"]:
            i = i + 1
            continue
        # Skip paragraphs whose HTML is under 20 characters (too short to be real content).
        if len(repr(maybe_first_p)) < 20:
            i = i + 1
            continue
        first_p = maybe_first_p

    first_a = first_p.find_all("a",limit=1)[0]
    href = first_a.attrs["href"]
    # Turn a relative href like "/wiki/..." into a full URL.
    new_url = urllib.parse.urljoin(url,href)
    queue.append(new_url)

One issue I had in class was a typo in how we access the URL from an <a> tag. I was accidentally trying a.href, which is wrong (oops!). The proper syntax is the line above that looks like this:

href = first_a.attrs["href"]
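A short offline sketch of why a.href doesn't work and what does, using an invented one-link snippet. In Beautiful Soup, dot access like tag.something searches for a child tag named something, so first_a.href looks for an <href> tag inside the link and finds none. The last lines show what urljoin() in the crawler does with a relative link.

```python
import urllib.parse
from bs4 import BeautifulSoup

html = '<p>See <a href="/wiki/Computer_program">computer program</a>.</p>'
first_a = BeautifulSoup(html, "html.parser").find("a")

# Dot access looks for a child *tag*, not an attribute, so this is None.
print(first_a.href)            # None

# These all read the href attribute.
print(first_a.attrs["href"])   # /wiki/Computer_program
print(first_a["href"])         # /wiki/Computer_program
print(first_a.get("href"))     # /wiki/Computer_program (get() gives None if missing)

# The href is relative; urljoin resolves it against the page's URL.
page_url = "https://en.wikipedia.org/wiki/Software"
print(urllib.parse.urljoin(page_url, first_a["href"]))
# https://en.wikipedia.org/wiki/Computer_program
```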

Modify the initial value of queue to include whatever URL you wish to start with, then run this, and you should see it print out all URLs on its way ultimately to "Philosophy" (or something that starts with "Philosoph": I had some issues with other similar terms creating loops and thought it was sufficient just to end on, for example, "Philosophy_of_language").
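One way to guard against loops, offered as a possible extension rather than part of the code above, is to keep a set of pages already visited and stop if one comes up again. To keep it runnable without any requests, the sketch uses an invented first_link dictionary in place of real Wikipedia pages, and follow_first_links is a hypothetical name; in the real crawler the visited set would hold URLs.

```python
# Invented "first link" map: each page points to where its first link leads.
first_link = {
    "Software": "Computer_program",
    "Computer_program": "Instruction_set",
    "Instruction_set": "Computer_program",   # a loop back!
}

def follow_first_links(start, first_link, target="Philosoph"):
    """Follow first links from start until reaching target, a loop, or a dead end."""
    queue = [start]
    visited = set()   # every page we have already processed
    path = []
    while len(queue) > 0:
        page = queue.pop(0)
        path.append(page)
        if page.startswith(target):
            return path, "reached target"
        if page in visited:
            return path, "loop detected"
        visited.add(page)
        if page in first_link:
            queue.append(first_link[page])
    return path, "dead end"

path, outcome = follow_first_links("Software", first_link)
print(path)      # ['Software', 'Computer_program', 'Instruction_set', 'Computer_program']
print(outcome)   # loop detected
```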

a. Is this really a queue?

But is this really a queue? It is an example of a list being used as a queue, but it's not the most interesting example because in this particular crawling case, we only ever have at most one item in the queue at a time!

A more interesting example would be if, on each page we visit, we found multiple URLs, and added them to the queue. In that case, the queue would be getting longer as we processed each page. This would be a way to build a corpus of all articles that are linked to some starting article. This would be a great way to automate the process of building a very large corpus, selecting links automatically by following a web of relations, rather than having to select a bunch of URLs manually (in a list, like we did above).

To modify the above example to do this, you could adjust the code that looks for only the first <a> tag in only that first <p>, and instead look for all <a> tags within all main_div_p_tags, for example. Then append() each URL to the queue, and save the text to a file as before.
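A sketch of that link-collecting step, with all_paragraph_links as a hypothetical helper name. It assumes we only want links to other articles (hrefs starting with /wiki/) and not footnote links like #cite_note-1; that filter is my own assumption, and you may want to refine it (for example, /wiki/File: links also start with /wiki/). The HTML sample is invented so it runs offline.

```python
import urllib.parse
from bs4 import BeautifulSoup

def all_paragraph_links(html, page_url):
    """Return absolute URLs for every article link inside the main <p> tags."""
    soup = BeautifulSoup(html, "html.parser")
    main_div = soup.find(id="mw-content-text")
    if main_div is None:
        return []
    urls = []
    for p in main_div.find_all("p"):
        for a in p.find_all("a"):
            href = a.get("href")
            # Assumed filter: keep article links, skip footnotes and other hrefs.
            if href is not None and href.startswith("/wiki/"):
                urls.append(urllib.parse.urljoin(page_url, href))
    return urls

sample = """
<div id="mw-content-text">
  <p><a href="/wiki/Computer_program">program</a> and
     <a href="#cite_note-1">[1]</a></p>
  <p>See <a href="/wiki/Data">data</a>.</p>
</div>
"""
links = all_paragraph_links(sample, "https://en.wikipedia.org/wiki/Software")
print(links)
```

Each URL in the returned list could then be passed to queue.append().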

You would probably also want to add some stopping condition here. Otherwise your crawler would just keep going on forever! You could add a new variable, set it to zero, and each time you request a page, increment that variable. Then stop when you have accessed enough pages, whatever enough is for you.
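Here is a minimal sketch of that counter-based stopping condition in a crawl that uses a list as a queue. To keep it runnable without the network, the hypothetical crawl() function takes links_on_page, a stand-in for requesting and parsing a page, and the link graph is invented. It also adds a visited set, which the text above doesn't mention, so no page is processed twice.

```python
def crawl(start, links_on_page, max_pages):
    """Crawl outward from start using a list as a queue; stop after max_pages."""
    queue = [start]
    visited = set()
    pages_fetched = 0   # the counter that provides the stopping condition
    order = []
    while len(queue) > 0 and pages_fetched < max_pages:
        page = queue.pop(0)
        if page in visited:
            continue    # don't request the same page twice
        visited.add(page)
        pages_fetched = pages_fetched + 1
        order.append(page)   # a real crawler would save this page's text here
        for link in links_on_page(page):
            queue.append(link)
    return order

# Invented link graph for demonstration only.
graph = {
    "Software": ["Computer_program", "Data"],
    "Computer_program": ["Software", "Algorithm"],
    "Data": ["Information"],
    "Algorithm": [],
    "Information": ["Data"],
}
print(crawl("Software", lambda page: graph.get(page, []), max_pages=4))
# ['Software', 'Computer_program', 'Data', 'Algorithm']
```

Because new links go on the end and pages come off the front, the crawl visits pages one "hop" away from the start before pages two hops away.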

I will leave this as an exercise for you to try, and I hope some of you do try this for the generation of your corpus for the project.

V. Wrapping up & homework

Using the techniques here you should now hopefully be able to complete the tasks laid out in Unit 2 Lesson 2 homework.