Code as a Liberal Art, Spring 2025

Unit 2, Lesson 3 — Thursday, April 3

A Markov chain algorithm

Today we're going to implement a Markov Chain algorithm to generate language designed to emulate existing text. This algorithm works by analyzing a large body of text called a corpus. It builds a data structure to represent that corpus as a collection of words, and for each, a list of words that are statistically likely to follow that word. Then we can give the algorithm a word to start with and ask it to generate a series of words likely to follow it.

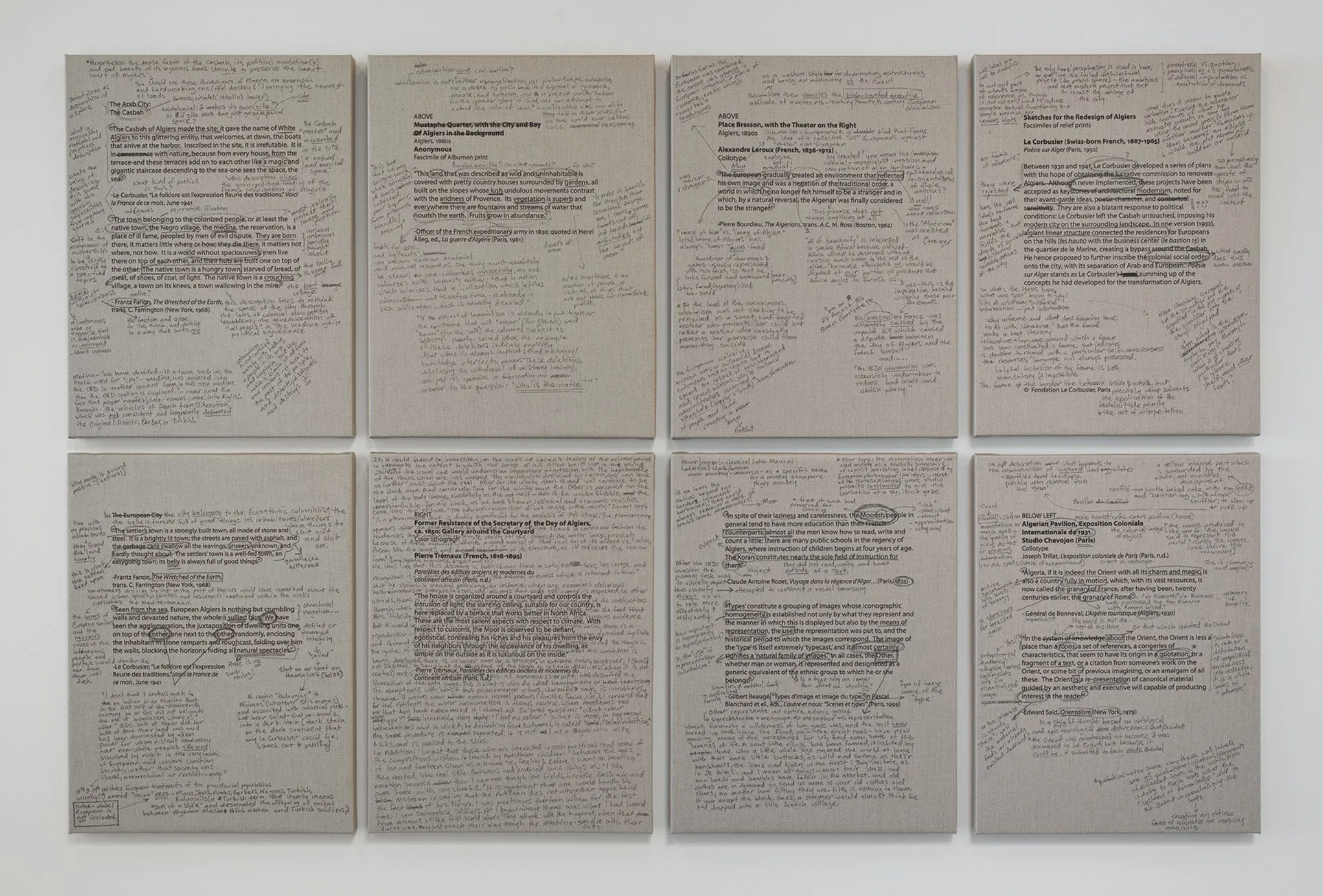

Meleko Mokgosi, "Wall of Casbah (2009 – 2014)". In this piece

the artist offers critical commentary about art and the museum

system by annotating the wall placards accompanying an

exhibition called "Walls of Algiers" at the Getty

Center. Read more

here or click to

enlarge.

Meleko Mokgosi, "Wall of Casbah (2009 – 2014)". In this piece

the artist offers critical commentary about art and the museum

system by annotating the wall placards accompanying an

exhibition called "Walls of Algiers" at the Getty

Center. Read more

here or click to

enlarge.

- Markov chain & Markov process explanation

- Markov chain data structure implementation

- An alternative data structure approach

- Homework and wrapping up

I. Markov process & Markov chain explanation

A Markov process is a type of algorithm that starts with a given state, and determines which state to go to next by considering all the possible next states from some model and the probable liklihood of each one to occur next based on that model.

A state here can be many different types of things: a step of a calculation or data processing procedure, a weather pattern, a step in a biological process, a turn in a game, a musical note, or many other things. Markov processes have a wide range of applications for modeling different kinds of phenomena.

We will be using a Markov process to generate human-like language. So in our case, the states will be words. We will specify a word to start with, and query a Markov process to select a next word, then repeat the process to generate a text.

A Markov process depends upon a specific data structure known as a Markov chain. A Markov chain stores all the various states that a system can be in, and for each state, it stores a list of possible next states, with a probability for each which represents the statistical likelihood of that state coming next.

In our case, since we'll be using a Markov process to generate text, and the states of our data structure will be words, for each word we will store a list of possible next words, and for each "next word" we will find some way to represent a probability for the likelihood that this next word would be chosen.

We will generate all our Markov chain data structure by writing a Python program to process a corpus, or a large body of text, which can be a single text file or multiple text files. For each word in the corpus, we will look at the next word that comes immediately after it, save it, and repeat the process for each instance of that original word. Ultimately, for each unique word, we will have a list of each next word that appears immediately after it in the text.

For each "next word", we will consider different ways to store some representation of how many times it occurs as an immediate next word. In this way, when generating next texts, we can select "next words" following the same statistical chance by which they are likely to occur in the original corpus, thus creating new phrasings that appear to us to be likely to occur in the original.

So this type of project involves two main steps: (1) write the code that analyzes a corpus and generates a data structure representing a Markov chain, and (2) implement a Markov process algorithm that uses this data structure to make randomized choices to generate text.

The goal is to generate new text that reads like it could plausibly be from the source text that we are starting with.

(jump back up to table of contents)II. Markov chain data structure implementation

Let's start by processing a text and generating a Markov chain data structure.

(jump back up to table of contents)a. Setup

For the below work, I will be using this very simple test case:

I like apples. I like oranges. Apples are red or green. Oranges are orange. Red, green, and orange are colors. Apples and oranges are fruits.Let's make a new folder for this work. I'll call mine

Unit 2 Lesson 3. Open a new VS

Code window and drag that in.

Now let's make a new text file with the above text to use

as a simple corpus for now. You can either make a new text file

and copy/paste the above text into it,

or download this file and

save it to the folder you just created. Either way, let's

assume we have a file now

called unit2lesson3_simple.txt with the

above very simple text.

b. Data structure planning

What type of data structure should we use for this? Think back to our data structure exercises from Unit 2 Lesson 2 Homework.

To restate, the goal here for the moment is to create a collection of words, and for each word we want to associate it with another collection consisting of each word that immediately follows it anywhere in the corpus. In our algorithm, we are going to want to take a word and look it up, to get a list of all words that might follow it, possibly also with some metadata about each next word.

How should we do it?

Pause here and consider your answer for a

moment before proceeding ...

.

.

.

The solution I propose, which these notes will explain how to implement, is a dictionary, where each key is a word, and each value is the list of words that follow that key word in the corpus.

For the above simple example, a few lines of this data structure then might look like this:

{ 'I': ['like', 'like'],

'like': ['apples.', 'oranges.'],

'apples.': ['I'],

'oranges.': ['Apples'],

'Apples': ['are', 'and'],

'are': ['red', 'orange.', 'colors.', 'fruits.'],

...

}

Some comments:

-

I am using the case directly from the corpus without

modifying it. I have seen others do it this way, but I have

also seen others capitalize or lowercase everything for

simplicity. I like doing it this way

because

'Apples'and'apples'are distinct, perhaps with some distinct semantic or syntactic value preserved by keeping track of whether they are capitalized or not. This has an added benefit of distinguishing in some cases between, e.g. Apple Computer and an apple fruit. -

I have left in all the punctuation for now. Similar to the

above, I have seen examples in which people remove it. Also

similarly to the above, I think there is some value in

preserving it as it will help us determine when to end

sentences, and now words that follow

'apples.'will be different from words that follow'apples'. Later on, we will see some examples where we'll want to remove or add special handling for punctuation, like in honorifics "Mr.", "Ms." which we do not want to consider the endings of sentences. -

Earlier in my explanation I described how we wanted to store

some statistical probabilities to represent likelihood of

occurence for each "next word" (the words in the lists). But

we don't see that here. What I propose we do for now is

simply store every instance in which a word occurs

as a next word, allowing for duplicates in the next word

list. Later, we will be able to use the

Python

random.choice()command, and that selection will accurately correspond to the statistical probability of that word occuring in the corpus. So when you see'I': ['like', 'like'],above, that is because'like'comes immediately after'I'twice in our source text. Later we'll discuss some other options for doing this.

c. Implementation

Now let's see how we can implement that. We will open the corpus as a text file, and start to process it in some way.

Make a new Python file in your working folder. I'll call

mine markov_datastructure.py.

Let's start easy: instruct Python to open our corpus text

file and make a new empty dictionary called markov.

file = open("unit2lesson3_simple.txt","r")

markov = {}

Next, let's read the contents of that file, replace the line

breaks ("\n") with a simple space character ("

") so everything is one on line, and then use

the split() command to break up a string by spaces,

effectively deleting all spaces and returning a list of each word in

the string in order.

file = open("unit2lesson3_simple.txt","r")

markov = {}

words = file.read().replace("\n"," ").split()

Next, let's loop over that entire list of words. Typically,

when looping over a list, we can use a for loop,

like this:

for word in words:

# do stuff ...

But in this case, as we are looping over each word, we also want

to kind of "look ahead" at the next word. That

isn't immediately possible to do with a for

loop, so instead we will use a while loop, setting

a looping variable i to 0, and repeating as long

as i is less than the length of the list,

incrementing i by one each time:

file = open("unit2lesson3_simple.txt","r")

markov = {}

words = file.read().replace("\n"," ").split()

i = 0

while i < len(words):

word = words[i]

next_word = words[i+1]

i = i + 1

Try running that. See any errors?

I get: IndexError: list index out of range.

The problem is that we are looping as long as i <

len(words), but in our loop we are trying to

access words[i+1].

The solution is to modify the stopping condition of our loop, stopping one item short, so our "look ahead" does not break:

file = open("unit2lesson3_simple.txt","r")

markov = {}

words = file.read().replace("\n"," ").split()

i = 0

while i < len(words) - 1:

word = words[i]

next_word = words[i+1]

i = i + 1

(Note that another way to do this would be to

use enumerate(), like this: for (i, word) in

enumerate(words): but I think the while loop

that I'm using here is a little easier to read.)

So this is looping over our entire corpus, but not doing anything yet. Now let's see how to actually build up our Markov chain data structure.

file = open("unit2lesson3_simple.txt","r")

markov = {}

words = file.read().replace("\n"," ").split()

i = 0

while i < len(words) - 1:

word = words[i]

next_word = words[i+1]

if word in markov:

markov[word].append(next_word)

else:

markov[word] = [next_word]

i = i + 1

This code is saying: If the current word is already in our

Markov map, then get the value associated with

that word (i.e. markov[word]) which is a list of

next words. Then, add the current next word to that list

with append(next_word). On the other hand, if the

current word is not yet in our Markov map, then add it

to the map, and for its value, make a new list containing only

the current next word.

That's it! Building a Markov chain data structure is as simple as that.

You should be able to run that without error, but we're still not seeing anything yet.

Try adding the Python "pretty-print"

library pprint, which displays complicated data

structures in nicely readable ways — a very useful tool

when working with complicated data structures.

import pprint file = open("unit2lesson3_simple.txt","r") markov = {} words = file.read().replace("\n"," ").split() i = 0 while i < len(words) - 1: word = words[i] next_word = words[i+1] if word in markov: markov[word].append(next_word) else: markov[word] = [next_word] i = i + 1 pprint.pp(markov)

You should be able to run that and see some output, the first few lines of which should exactly match the snippet I included above.

(jump back up to table of contents)d. Experimentation

Try to run this on some different corpora. Perhaps you want to try it on the large text file that you generated from your manually assembled list of URLs from Unit 2 Lesson 2.

Or if you'd like to work with a longer body of text, you might try something like Charles Dickens' David Copperfield, which I downloaded from archive.org. You can download this as a large text file here: unit2lesson3_dickens_copperfield.txt.

Keep in mind that for even a modestly larger

corpus, pprinting the entire thing will cause a

pretty massive scroll in your terminal window. Maybe instead you

can try sanity checking your results in a more targeted way. For

example, if you run this algorithm on the David

Copperfield file, try printing the data structure only for

certain words:

pprint.pp( markov["Copperfield"] )

or

pprint.pp( markov["Davy"] )(jump back up to table of contents)

III. An alternative data structure approach

The above is explanation is just one way to implement a Markov data structure. There are many others.

One variation in particular should be pretty understandable following the above discussion, and illustrates some trade offs pro and con in relation to the abeove.

This other approach is that instead of storing

a list of all next words, and

using random.choice() to select one, we could store

some quantitative data about each next word, and use that to

make our selection. For a given word, this could be a count of

the number of times each next word occurs, or it could be a

statistical likelihood of it occuring (which would be calculated

by dividing the number of occurences of a next word by the

total number of occurences of times the original word).

For this approach, instead of a list of next words for each word, we could store another dictionary, mapping each next word to its count or probability. So the data structure for our simple example above would look like this:

{ 'I': { 'like': 2} ,

'like': { 'apples.': 1, 'oranges.': 1 },

'apples.': { 'I': 1 },

'oranges.': {'Apples': 1},

'Apples': {'are': 1, 'and': 1},

'are': {'red': 1, 'orange.': 1, 'colors.': 1, 'fruits.': 1},

...

}

Here is the code to generate that:

import pprint

file = open("unit2lesson3_dickens_copperfield.txt","r")

markov = {}

words = file.read().replace("\n"," ").split()

i = 0

while i < len(words) - 1:

word = words[i]

next_word = words[i+1]

if word in markov:

next_words = markov[word]

if next_word in next_words:

next_words[next_word] = next_words[next_word] + 1

else:

next_words[next_word] = 1

else:

markov[word] = {next_word: 1}

i = i + 1

Note how now in the markov dictionary,

each key now points to another dictionary,

which includes all next words, each one pointing to its

occurence count.

The algorithm to generate text from this would be slightly more complicated than with the above, but might be slightly faster.

Perhaps the biggest distinction is that this version would require quite a bit less storage than the original.

In the original version above, each word from the source text is stored in the data structure. So the size of the data structure will be the same as the source text! In the version shown here, we're saving each word once with a count of its occurences, which would allow the total storage to be quite a bit less.

There are definitely some advantages and disadvantages to each approach! This helps illustrate the concept of a time/space tradeoff, a common topic within computer science.

(jump back up to table of contents)IV. Homework and wrapping up

For your homework this week, make sure you have the above code running (the original version above) and do some experimentation with different corpora. Details are explained on the homework page.

Consider also making some progress on the project by getting your corpus going, trying to run the Markov data structure code on it, and experimenting to see some bits of what that looks like.

Next week we will talk about using this Markov data structure to actually start generating texts.